Public dialogue on the use of data by the public sector in Scotland

This report presents the findings from a public dialogue on the use of data in Scotland commissioned by the Scottish Government to explore the ethics of data-led projects. The purpose of the panel was to inform approaches to data use by the Scottish Government and public sector agencies in Scotland.

Appendix E: Q&A document

About this document

As part of the panel process, members have the opportunity to ask the speakers questions about their presentations. Some questions are addressed during the session and any remaining questions are collated in this Q&A document which is shared with the relevant speakers and the Data & Intelligence Network for response. A glossary of terms is also provided to help with some of the more technical language used throughout the panel.

The questions are organised by session and by theme. The document is available to panel members at any stage of the process.

Glossary of terms

Algorithm governance - the use of algorithms and artificial intelligence in governance. Understanding the social implications of artificial intelligence, big data, and automated decision-making on society gives rise to concerns of transparency and accountability, among other ethical issues.

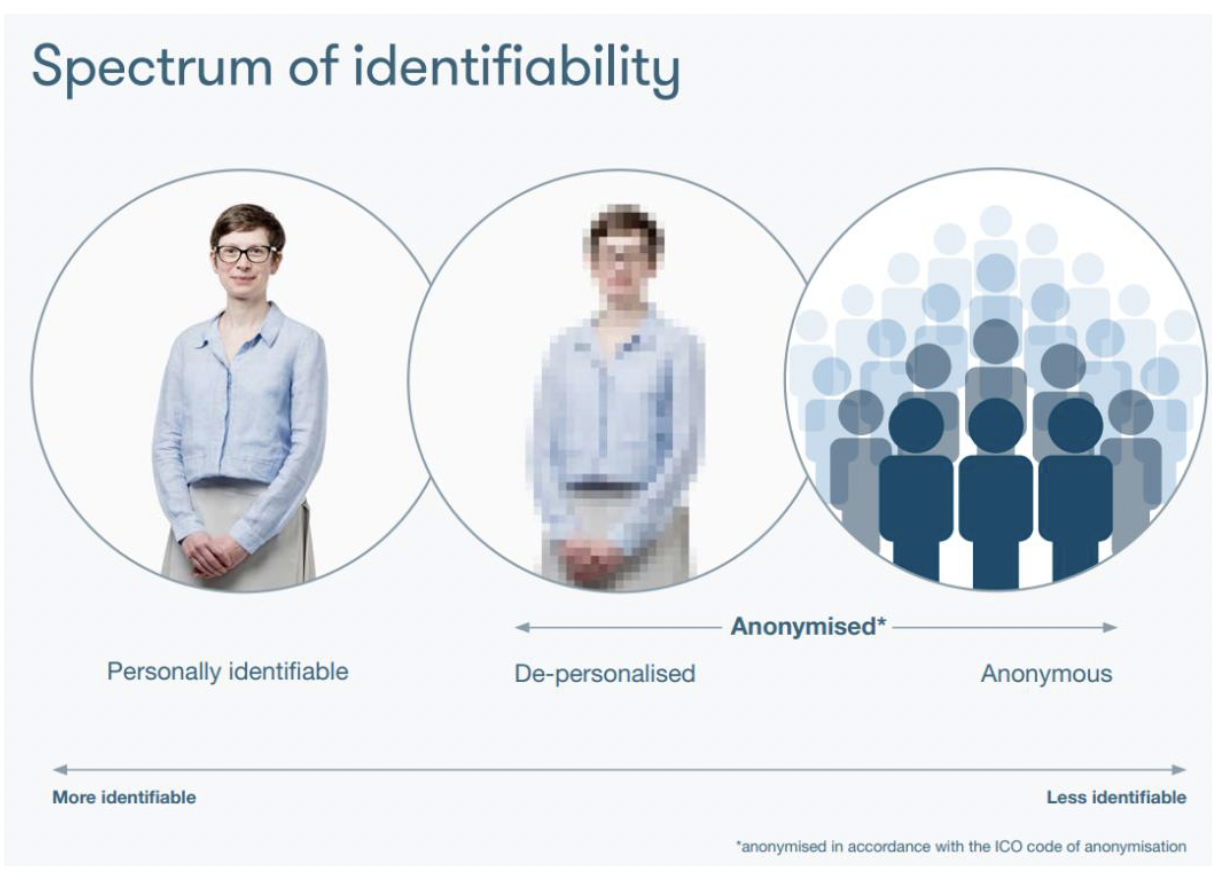

Anonymisation of data – the process used to prevent someone's personal identity from being revealed in a given set of data. The technical language of identifiability is complex. Many different words are used to describe the same thing, and many of those words are unnecessarily technical (for example pseudonymised, key-coded, de-identified for limited disclosure). It is important to explain clearly what it means when information is ‘anonymised’ and what the likelihood of re-identification is when using different types of data. The picture below tries to explain this, and you can read more about it here.

(Source: Understanding Patient Data)

Communities of practice - groups of people who share a concern or a passion for something they do and learn how to do it better as they interact regularly. In this case, the Network supports communities of practice on the ethical use of citizens’ data.

Constitutional privacy – or “decisional privacy” refers to the freedom to make one’s own decisions without interference by others in regard to matters seen as intimate and personal. This is linked with informational privacy (see below).

Contextual integrity - requires that information gathering and sharing be appropriate to that context. An example of this can be restrictions on communication of patient information outside the healthcare context.

Contestation – refers to the ability to challenge outcomes determined by automated processes in governance. This is necessary to protect rights, ensure accountability and enhance public trust.

Data ethics - the benefits, risks and wider social harms that should be considered when thinking about how data is used, such as when used by Network members for different types of projects.

Data justice – fairness in how people are treated, represented, and ‘seen’ by virtue of data processing. In large scale data use it is important to consider how that data might lead to bias or discrimination against groups of people.

Data & Intelligence Network – The DIN is a collaboration made up of members across the Scottish Public and Not-for-Profit Sectors, including Health Boards/Agencies, Local Authorities, Academia and third sector. The DIN is led by a dedicated team of Scottish Government staff who actively work with members of the network to help deliver projects and support communities of practice. Throughout the panel it might be referred to as the DIN or the Network.

Data Protection Act – controls how personal information can be used and your rights to ask for information about yourself. Under the Data Protection Act 2018, you have the right to find out what information the government and other organisations store about you. Together with the UK GDPR, this forms the UK’s data protection legal framework.

Data Protection Impact Assessment (DPIA) - a process to help organisations to identify and minimise the data protection risks of a project.

Data Controller – the data controller determines the purposes for which and the manner in which personal data is processed. It can do this either on its own or jointly or in common with other organisations. This means that the data controller exercises overall control over the 'why' and the 'how' of a data processing activity.

Data minimisation - requires that the collection of personal information be limited to what is directly relevant and necessary to accomplish a specified purpose. Data should also be retained only for as long as is necessary to fulfil that purpose.

Data Processor - a person or organisation that acts on behalf of, and only on the instructions of, the relevant controller.

“Five Safes” - a set of principles which enable data services to provide safe research access to data. You can read more about the five safes here. The principles are:

- Safe data: data is treated to protect any confidentiality concerns.

- Safe projects: research projects are approved by data owners for the public good.

- Safe people: researchers are trained and authorised to use data safely.

- Safe settings: a SecureLab environment prevents unauthorised use.

- Safe outputs: screened and approved outputs that are non-disclosive.

Information privacy – the control over one’s information because an individual’s choices can be influenced on the basis of information about them or others like them.

Informed consent - under the General Data Protection Regulation (GDPR), “for consent to be informed and specific, the data subject must at least be notified about the controller’s identity, what kind of data will be processed, how it will be used and the purpose of the processing operations.” From a privacy perspective, principles of informed consent require that the consent must be unambiguous, specific, informed and freely given.

Group privacy - refers to the collective interest in privacy and is concerned with the use of information and inferences drawn at a group rather than individual level. Collective interest in privacy arises out of the use of information concerning one member of a group to undermine the autonomy of other members of that group.

UK GDPR – this is our version of the EU’s General Data Protection Regulation (GDPR) and it controls how your personal information is used by organisations, businesses or the government. Together with the Data Protection Act, this forms the UK’s data protection legal framework.

Non-anonymised data - data which does contain identifiable information (e.g. name, address).

Precautionary principle – has its origin in environmental law and “enables decision-makers to adopt precautionary measures when scientific evidence about an environmental or human health hazard is uncertain and the stakes are high”. For the purpose of this public panel, it refers to the caution that needs to be exercised in using automated technologies in governance and designing safeguards to protect human rights.

Pseudonymised data – data that has been treated with a technique that replaces or removes the information in a data set that identifies an individual.

Safe haven - a secure place used to store particular research data, for access exclusively by approved colleagues. Strict safeguards control who can access medical and personal data for research. When researchers use this data, they must use IT systems with very high standards of security.

Quantitative data – data in the form of counts or numbers where each data set has a unique numerical value.

Qualitative data – information that cannot be counted, measured or easily expressed using numbers. It is collected from things like text, audio or images.

Session one: introduction

The speakers

- Scottish Government - introduction to the Data & Intelligence Network

- Nayha Sethi, University of Edinburgh - introduction to data, data ethics and data justice

- Stephen Peacock, Information Commissioner’s Office – introduction to data protection

Questions raised during session one:

The Data & Intelligence Network

Questions (Q) and Responses (R). All responses provided by the DIN.

Q - If the DIN is trying to pull together a coherent platform, how do they separate trend data from identifiable data?

- R- The DIN does not provide a platform nor does it host data, so it has no need to separate trend data from identifiable data.

Q - Where does the DIN fit within the public sector – are they part of the Scottish Government? Are any private sector organisations involved in their work?

- R - The DIN is a collaboration made up of members across the Scottish Public and Not-for-Profit Sectors, including Health Boards/Agencies, Local Authorities, Academia and third sector. The DIN is led by a dedicated team of Scottish Government staff who actively work with members of the network to help deliver projects, support communities of practice etc. Some services delivered by DIN members are supported by private organisations, and therefore there are occasions where the DIN engages with non-public sector bodies.

Q - The DIN work with quite a lot of different partners. What controls are in place to make sure data is processed/shared securely and appropriately (e.g. that data only used for specified purpose)?

- R - Projects go through an assessment process including an initiation document and, where appropriate, an ethical workbook that helps identify and specify how data would be processed/shared securely and appropriately. Compliance with data protection requirements and other applicable legal frameworks, as well as guidance from the Information Commissioner’s Office (e.g. Data Protection Impact Assessments, Data Sharing Agreements and Data Processing Agreements), is the responsibility of the individual organisations involved in the project. The Scottish Government support team provides expertise and support to ensure the appropriate checks are in place.

Q - Could the DIN share some examples of the ethical considerations they have to make re. accessing data. And where is the proof that data is being used ethically?

- R - At the end of the project, the close out process will review whether the outputs, including controls over the use of data, have been successfully implemented.

Examples of ethical considerations the DIN members have taken in the past will be presented in workshops 2 and 3.

Q - At what level/who makes decisions about the ethical use of data?

- R - Decisions about the ethical use of data are taken at several levels. The first level is within member organisations themselves: when projects are proposed, or as issues or problems in a project emerge, a first ethical assessment may take place. Should the DIN become involved, we would work with members by going through the DIN ethical workbook to identify ethical concerns and determine how best to address these. The ultimate decision on how data should be used stays with the organisations sharing the data.

Going forward we envisage the public panel will help us by identifying overarching ethical principles reflecting the wider public’s perspective, so that these are included in the decisions we make about data use in Scotland, including the activities of the DIN.

Q - Are the public able to find out how DIN members are using their data and specifically what data are being accessed/used?

- R - Each member organisation will have different channels and platforms to publicise their data led projects. Additionally, when the SG support team agrees to support projects it will aim to be as transparent and open as possible about these projects through its newsletter, blogs etc.

Q - If there is a data breach what steps are in place to recover any leaked, personal data? And is it possible to fully recover it once it’s ‘out there’?

- R - The DIN doesn’t hold any personal data. As indicated above, it is the responsibility of each individual member organisation to comply with data protection requirements and other applicable legal frameworks as well as guidance from the Information Commissioner’s Office.

Q - How are decisions made about what kind of data is shared with which kind of organisations? And does that create a possible conflict of interest between different members?

- R - When DIN members propose sharing data as part of a DIN project we will work through the proposals, highlight potential ethical considerations etc. We would also recommend research into whether a similar solution exists and whether the new project is the best solution and does not conflict with the aims and objectives of another member organisation. In general sharing between members is by agreement.

Q - How many people have access to our data, and how is this monitored?

- R - The DIN does not hold any data about individuals (except for contact details of network members) and therefore does not monitor who accesses it. In projects that the SG Support Team helps deliver, the Data Protection Impact Assessment (DPIA) and the DIN ethics workbook do ask project sponsors to indicate how many people would have access to data and how access is controlled/managed. Data access remains a responsibility of individual member organisations in compliance with data protection requirements and applicable legal frameworks as well as guidance from the Information Commissioner’s Office.

Q - How can data be protected in transfer – especially (but not only) between private and public sectors? What role does encryption play?

- R - In every project we get involved in and support, we ensure data is held and transferred in a safe and secure way using recognised national and international standards.

Encryption is not always the best solution when transferring data. Encryption like other security tools needs to be used proportionately, and if used, should be as part of a well-structured data security solution. DIN members are best placed to agree their data sharing security needs with organisations they share data with.

Q - How does the government / public sector use AI in relation to data?

- R - The Scottish Government (SG) does not have any generic AI-specific internal policies and guidelines. However, we adhere to AI regulatory and policy frameworks already in place. Any public body in Scotland has to adhere to the GDPR legal requirements on data, which are regulated by the Information Commissioner's Office (ICO).

They also need to take into consideration the ICO’s Guidance on AI and data protection. SG also adopts the high level principles within Scotland’s AI Strategy and recommends that other public bodies do the same.

SG and public bodies in Scotland also collaborate with the Centre for Data Ethics and Innovation (CDEI) to ensure that ethical considerations and the values that citizens want are reflected in governance and policy frameworks on the use of AI.

Q - Do different organisations (in the DIN) all follow the same safety standards – for example regarding internet security, or ethics?

- R - In every project we get involved in and support, we ensure data is held and transferred in a safe and secure way using recognised national and international standards. As regards ethics, there may be a first ethics assessment within member organisations themselves when projects are proposed, or as issues or problems in a project emerge. This initial assessment will be typically conducted in line with any ethics framework or guidance that organisations may have in place. Data ethics considerations are not widely applied yet.

Q - If some companies aren’t always pseudonymising or anonymising data properly, how can government improve this situation?

- R - When we get involved and support a project we ensure that member organisations comply with Information Commissioner’s Office guidance on anonymisation.

Q - If GDPR and DPA set policy, what is our role in this public panel?

- R - We envisage the public panel will help us by identifying overarching ethical principles that reflect the wider public’s perspective. These will be included in the decisions we make about data use in Scotland which are not covered by legislation and regulations, including the activities of the DIN.

Data ethics

Q - If data sharing should be done 'to serve mankind', who decides what mankind is?

- R - University of Edinburgh response: Many justifications for using, collecting and sharing data hinge on the diverse benefits that data use can deliver. Concepts such as 'public interest', 'public benefit' and 'common good' play a key role in decisions about whether to authorise access to data not only in terms of ethical review of data use, but also, for example, with regards to setting aside legal requirements for seeking consent or anonymising data.

Each of these terms will be used in different ways depending on the context, but one thing they have in common is that they all appeal to notions of the common good, benefit, welfare or well-being of society. An important ethical question is indeed who gets to decide what constitutes public interest or public benefit. This might vary depending on a variety of factors including: the organisation holding the data, the type of data in question, the purpose for data sharing and who will be accessing it. Many organisations that hold health and care data will make assessments about public benefit, as will decision-makers across, for example, University ethics committees, research ethics committees, public benefit and privacy panels or data oversight groups. These committees or groups will be comprised of a variety of individuals with different expertise, skills and interests.

Further Resources: In their recent report Data for Public Benefit (2018) the Understanding Patient Data initiative held a series of workshops to understand what 'public benefit' meant in the context of data sharing involving personal data. More recently and in collaboration with the UK National Data Guardian, the initiative has released their report Putting Good into Practice: A public dialogue on making public benefit assessments when using health and care data (2021). The report is quite long but it is worthwhile reading the executive summary (particularly p 1 - 5).

- R - ICO response: I assume this question is who decides what serves mankind? This is a quote from a recital which is to be read alongside Article 1 of the GDPR. The recitals set out that the right to data protection is a fundamental right but not an absolute one; the right to data protection must be balanced against other fundamental rights. To comply with data protection law organisations must be able to demonstrate that their use of personal data is necessary and proportionate and does not result in a high risk to rights and freedoms. When deciding whether to share data organisations must weigh up the risks of not sharing against the risks of sharing. The ICO, as the UK regulator of data protection, can take regulatory action where data protection law has not been complied with, with the courts as the final arbiter.

Data protection

Questions (Q) and Responses (R). All responses provided by the ICO.

Q - If data is shared for research purposes: Is it de-identified? And have people consented to their data being shared for this purpose?

- R - The short answer is it depends!

Is it de-identified?

Data protection law says that: You should only process personal data that is adequate, relevant and limited to what is necessary for your purpose.

In some cases it will be possible to conduct the research using entirely anonymous data (in other words data that is no longer personal data because key identifiers have been removed so that individuals are no longer identifiable or the chance of identifying any individual is sufficiently remote).

For other research it may be necessary to retain some identifiers so that, for example, different data sets can be combined or ‘linked’ (e.g. information about your health that might be held by the NHS with information about your education which may be held elsewhere) or so that an individual’s progress can be tracked over time. Where this is the case the researchers can add a layer of protection called pseudonymisation (or de-identification). This would be where an identifier like someone’s name is replaced with a reference number. Pseudonymisation means that people are not identifiable from the dataset itself. However, they are still identifiable by referring to other, separately held information. This gives individuals a layer of privacy and reduces risk of harm whilst not obstructing the research.

ICO guidance says that where personal data is being used for research purposes researchers should: ensure that you do anonymisation or pseudonymisation at the earliest possible opportunity, ideally prior to using the data for research purposes.
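As an illustration only, the idea of replacing a name with a reference number can be sketched in a few lines of Python. This is a minimal sketch and not a description of how the ICO or any DIN member does it: the function name `pseudonymise`, the `REF-` prefix and the example records are all made up for this document. The point it shows is that the research dataset no longer contains names, while a separately held lookup table still allows re-identification by whoever controls it.

```python
import secrets

def pseudonymise(records, id_field="name"):
    """Return (dataset without id_field, lookup table of identifier -> reference)."""
    lookup = {}   # hypothetical key table; in practice held separately and securely
    used = set()  # references already issued, so no two people share one
    dataset = []
    for record in records:
        identifier = record[id_field]
        if identifier not in lookup:
            ref = "REF-" + secrets.token_hex(4)
            while ref in used:  # extremely unlikely, but keep references unique
                ref = "REF-" + secrets.token_hex(4)
            used.add(ref)
            lookup[identifier] = ref
        # Copy every field except the direct identifier, then add the reference.
        row = {k: v for k, v in record.items() if k != id_field}
        row["ref"] = lookup[identifier]
        dataset.append(row)
    return dataset, lookup

# Entirely fictional example records.
records = [
    {"name": "Alex Example", "year": 2020, "condition": "asthma"},
    {"name": "Alex Example", "year": 2021, "condition": "asthma"},
    {"name": "Sam Sample", "year": 2021, "condition": "diabetes"},
]
dataset, lookup = pseudonymise(records)
```

Because the same person always receives the same reference, their records can still be followed over time or linked across datasets, which is why pseudonymised data remains personal data rather than anonymous data.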

Have people consented to their data being shared for this purpose?

This depends upon the research being conducted. Data protection law recognises the importance of scientific and historical research to society through a set of provisions called the research provisions. These allow data collected for one purpose to be shared for research purposes without the need to collect fresh consent or a specific legal provision requiring or allowing the sharing, provided certain safeguards are in place. This includes suitable pseudonymisation.

Q - Will there be changes/deregulation of data protection rules in the UK as a result of Brexit?

- R- The Data Protection and Digital Information Bill was introduced to Parliament on 18 July 2022. You can read the Bill here. If this becomes law it will make some changes to the current data protection law. The proposals are for a more flexible, outcomes-focused regime that supports responsible data use and innovation while still protecting individuals’ rights.

Yesterday (03 October 2022) at the Conservative Party Conference, Michelle Donelan, Secretary of State for Digital, Culture, Media and Sport announced her approach to data protection reform which includes introducing a new UK data protection framework. It is not clear at present what changes will be made to the existing proposals.

Q - How do you protect people’s privacy when people are working from home? Is there greater risk of breaches as a result of this?

- R- Working at home may present new or different risks from office-based working. However, employers should have measures and policies in place to ensure that these risks are managed and reduced, that sufficient protections are in place and that data protection law is complied with. At the beginning of the pandemic we published some guidance for employers on this: Working from home | ICO

Q- What is the ICO’s audit process?

- R- ICO audits assess whether an organisation is following good data protection practice and meeting its data protection obligations. Following an audit, a report will be produced that sets out recommendations for improvement. The ICO takes a risk based approach to identifying which organisations it audits. We focus on those areas we feel we will have the biggest impact and organisations who would benefit the most from an independent assessment of their compliance with data protection legislation. More information on the process can be found here: A guide to ICO audits

Q - How is special category data used differently and why is it therefore defined as special category?

- R - Special category data includes:

- personal data revealing racial or ethnic origin;

- personal data revealing political opinions;

- personal data revealing religious or philosophical beliefs;

- personal data revealing trade union membership;

- genetic data;

- biometric data (where used for identification purposes);

- data concerning health;

- data concerning a person’s sex life; and

- data concerning a person’s sexual orientation.

It is not that special category data is used differently that means it is defined as special category. Rather it is because data protection law gives it greater protections. This is because use of this data could create significant risks to the individual’s fundamental rights and freedoms. For example, the various categories are closely linked with:

- freedom of thought, conscience and religion;

- freedom of expression;

- freedom of assembly and association;

- the right to bodily integrity;

- the right to respect for private and family life; or

- freedom from discrimination.

The panel process

Q - Can we see the presentations in advance to help us get our head around the material before we meet?

- R (Ipsos) - thank you for this suggestion and it’s a great idea. We will look at doing this for future sessions.

Session Two: Past Projects (pt 1)

The speakers

- Scottish Government – the shielding list project

- Scottish Government – the CURL project

Questions raised during session two:

The shielding list project

Questions (Q) and Responses (R). All responses provided by Scottish Government.

Q - What’s happening with shielding data now? Is it being kept? How long for? Is it being updated and how?

- R - Use of the shielding list (also known as highest risk list) ended on 31st May 2022. It will be kept in line with NSS retention policies but will not be amended or updated. Whilst the data on individuals who were on shielding lists is not being updated the underlying data on health conditions of individuals will be updated on GP systems.

Q - What other third parties (apart from employers) received the shielding data?

- R - We are not aware of any other third parties receiving or accessing the shielding data.

Q - What happens if data is missing for an individual?

- R - When it was operational it would depend on many factors: What type of information was missing? When was the missing data identified? Who needed to be contacted to get the correct information etc.? However, as the shielding list is no longer being updated, any missing data will not be changed as it provides a record that data was missing.

The CURL project

Questions (Q) and Responses (R). All responses provided by Scottish Government.

Q - Were any private sector organisations involved in handling the data?

- R - No. There are no private companies or organisations involved in handling or processing the CURL data.

Q - Why wasn’t electoral roll data used to update the addresses?

- R - The underlying law (Representation of the People (Scotland) Regulations 2001) that requires the Electoral Register (roll) to be created doesn’t allow the register to be used for anything other than organising and running elections and a very limited range of other Local Authority activities.

Q - What is the main purpose of CURL? Are there any particular problems you feel CURL would be a good solution for?

- R - CURL was developed to understand where discharged hospital patients were transferred to and to understand COVID-19 testing in Care Homes. CURL has also been used to understand the geographic spread of outbreaks and vaccine take-up across Scotland. CURL could be used, more generally, to look at the spread of different types of health conditions and how they relate to environmental factors, housing conditions and other deprivation indicators.

Wider ethics and the role of Network

Questions (Q) and Responses (R). All responses provided by Scottish Government.

Q - Who has the ultimate say on how data is handled and used, particularly with regards to ethical decisions?

- R - The Data Owner and the Data Access Authority control who can access personal data and how it is accessed, and specify the security features that the computer environment needs to have. Ethical checks should be carried out by the organisation the person wanting to access the data works in. For example, university researchers follow their internal ethics approval process, while Scottish Government statisticians use the UK Statistics Authority process. The DIN has an Ethics Workbook that all the Network members requesting our help should fill in. We would like to ask you, the citizens, who do you think should review the Ethics Workbook?

Q - Will I be told if my data is involved in a data breach?

- R - When the risk is considered high, all individuals must be told. The Data Protection Act 2018 requires this.

Q - Do public sector bodies get fined like private sector ones do?

- R - Yes

Q - Is there ever compensation for people whose data has been compromised?

- R - That would be a matter for a court or maybe the Information Commissioner’s Office (ICO).

Q - Who do you turn to if data is mishandled?

- R - The organisation’s Data Protection Officer (DPO), the organisation’s Senior Information Risk Officer (SIRO) and ultimately the ICO.

Q - What are the actual benefits for society of analysing anonymised data?

- R - This is a very big question! Briefly, by letting Public Sector and university researchers use anonymised, real data about individuals, they can get a true understanding of how people go about their lives and how this could be affected by different policy decisions. We can also use research findings to help colleagues design better policies. Because the data is often routine information that public sector organisations already collect, it is less intrusive and costly than collecting the same information by other means, such as through large-scale surveys. It allows entire populations, or specific parts of the population to be studied, reducing common problems with gaps in data often encountered in surveys.

Q - What’s the step by step process for ethical approval of a project? How do you decide on a project when there’s no obvious right or wrong position?

- R - At the moment, all Network members requesting our help should fill in the Ethics Workbook which explores the risks and benefits of the project at different levels. We do not yet have a formal process for assessing the completed Ethics Workbooks, and would like to ask you what you think this process should look like from the citizens’ perspective.

Q - As we review all these projects, what should we be worried about? What are other people worrying about when it comes to data being used in these ways?

- R - In reviewing projects we would suggest, amongst other factors, that panel members think about whether the benefits of a project to individuals or the wider community outweigh any risks. As the nature and scale of the projects vary so much there will be a range of worries and concerns. By bringing their own experiences and concerns Panel members will help projects see the “bigger picture” and reflect on wider concerns.

Session Three: Past Projects (pt 2)

The speakers

- Duncan Buchanan, Research Data Scotland – the equalities and protected characteristics project

- Scottish Government – the Ukrainian Displaced People project

- Anuj Puri – an outside perspective: reflections on the ethical issues

Questions raised during session three:

Equalities project

Questions (Q) and Responses (R). All responses from Research Data Scotland.

Q - How do the Scottish Government keep the data collected (i.e. from the Census) up to date with more recent data? And how do they ensure they do this correctly?

- R - The census is collected and stored separately as a one off exercise every 10 years. To use it along with other more up to date data sources, like from the NHS, requires the quite complex data linkage work I described in my presentation. Once linked together, you can find the most up to date records for individuals. However, keeping this exercise up to date requires a plan to continually ‘refresh’ this data linkage at regular intervals, say every 3 months or every year. We haven’t got that plan yet, having just completed the initial data linkage work. But it’s something we need to consider once we can demonstrate the value, and security, of having the up to date equalities data all together in one place. (Response provided by Research Data Scotland)

Q - In what ways will the data collected be used now, and how is that decided?

- R - The first step is to run a so-called ‘proof of concept’ project where the dataset is used by one public sector organisation to analyse equalities data for real. We have a couple of organisations interested but are still arranging something that can be done fairly soon and is of pressing need. This may run smoothly or may highlight further improvements needed. Further to this, we need to consult with public bodies and researchers on whether it meets their needs for equalities duties and monitoring. If successful, we need to return to the independent scrutiny panels (one for NHS data, one for government data) with a plan for how, and to whom, the dataset will be made available in future. However, the basic model will follow the model currently in operation for access to sensitive public sector data for research. That model involves each project applying for access and being assessed based on the Five Safes framework used across the UK: safe people, safe projects, safe settings, safe outputs, safe data. This assessment is normally done by the independent scrutiny panels. (Response provided by Research Data Scotland)

Q - To what extent does anonymity, or pseudonymisation, of data compromise data quality?

- R - It does not directly affect the data quality of the characteristics themselves (like age, ethnicity or religion). Data quality issues can arise from the process of anonymisation, which involves attempting to identify the same individual across different datasets and systems, e.g. census, school or NHS. Because this relies on personal information like names, addresses, dates of birth etc., any variation in how these are recorded across systems (e.g. mistakes, spelling differences) increases the risk that you can’t identify the same person appearing in different systems. So individuals could get missed or be assumed to be two different people. Once you anonymise the records and remove personal information, there’s no way of knowing that two records could be the same person or that some people are not appearing at all. There is always a percentage in this bracket given the numbers involved, but it’s usually pretty low. Lots of in-depth academic work has been done on developing the algorithms used in data linkage methods like this, but it’s definitely something that people using the data need to be aware of when doing their analysis on the anonymised data. (Response provided by Research Data Scotland)
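To make the linkage problem described above more concrete, here is a minimal sketch in Python. It is an illustration only and not the method used by Research Data Scotland: the names, dates of birth, and the `normalise` and `link_key` helpers are all invented, and a plain hash of a name is not a secure way to pseudonymise real data. It shows how a small spelling difference ("John" versus "Jon") stops two records from being recognised as the same person, even after simple tidying of the names.

```python
import hashlib

def normalise(name: str) -> str:
    """Lower-case a name and keep only letters, so trivial differences
    (capital letters, spaces) do not block a match."""
    return "".join(ch for ch in name.lower() if ch.isalpha())

def link_key(name: str, dob: str) -> str:
    """Hypothetical linkage key: a short hash of normalised name plus date of birth.
    Assumption for illustration only; real linkage uses more robust methods."""
    return hashlib.sha256(f"{normalise(name)}|{dob}".encode()).hexdigest()[:12]

# Invented example records: (name, date of birth)
census = [("Catriona MacLeod", "1980-04-02"), ("John Smith", "1975-11-30")]
nhs = [("catriona macleod", "1980-04-02"), ("Jon Smith", "1975-11-30")]

census_keys = {link_key(name, dob) for name, dob in census}
nhs_keys = {link_key(name, dob) for name, dob in nhs}

linked = census_keys & nhs_keys    # recognised as the same person in both sources
unlinked = census_keys ^ nhs_keys  # appear in only one source after linkage

print(f"linked: {len(linked)}, unlinked: {len(unlinked)}")
```

Once the names are removed and only the keys remain, the two "Smith" records look like two unrelated people. Linkage methods used in practice typically compare several fields and allow for near matches to reduce this kind of error.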

Ukrainian Displaced People project

Questions (Q) and Responses (R). All responses from Scottish Government.

Q - What differences are there between Scotland and other UK nations re. how they approach data on Ukrainian refugees and hosts?

- R - Immigration is not devolved, so all visa applications are processed by the Home Office following UK Government policies. A UK-wide system exists but Scotland and Wales decided not to use it as it would mean being closely coupled with all UK policy. Scotland and Wales both implemented a ‘super sponsor’ scheme where the government is the visa sponsor and is responsible for matching the displaced person(s) with temporary accommodation. This has been the main policy difference. The approach to data is similar across all 4 nations as local authorities are responsible for housing and child services throughout the UK. A difference in Scotland was the decision to use the NHS NSS call centre for initial contact with people applying under the super sponsor scheme. This was already in place for dealing with COVID and was reused to enable Scotland to contact large numbers of people with immediate effect.

Q - Who has access to data on hosts/refugees? Which delivery agencies/parts of the council?

- R - NHS NSS Call Centre get visa data for super sponsor scheme only.

Local Authority Housing Departments get visa data for private sponsor scheme only and host data to carry out property checks and background checks on hosts.

Local Authority Child Services get visa data for super sponsor and private sponsor schemes along with host data in order to carry out safeguarding checks where minors are involved.

COSLA, Local Authority Housing Department and Scottish Government Matching Team also receive augmented data from the previous steps with additional data that helps them match displaced people with hosts.

There is some additional data concerning finance, education, etc but this is collated and aggregated data with no personal data.

Q - How is data security managed on the Ukraine project?

- R - We have strong processes in place for authentication and authorisation (including multi-factor authentication). All requests for new data or changes to existing data are subjected to governance processes. We are currently using a standard Scottish Government system for sharing data which is already compliant with cyber and GDPR standards. As we develop new systems to speed up the matching process and reduce the time people spend in temporary accommodation, these are subjected to the same assessments to ensure the ongoing security of the data.

Q - Is there any risk to ‘group privacy’ / a risk to the Ukrainian community from how data could be used?

- R - This was not initially considered as the main priority was providing refuge and safety to very vulnerable people. It is something we need to consider again as we start to plan longer term integration.

Q - What level of anonymity (if any) was there in this project?

- R - When it comes to processing visa applications, hosts and matching, none of the data is anonymised as we need to process each individual case with the personal data. All other data, including published statistics, is aggregated to ensure anonymity.
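As a rough illustration of what aggregating data can look like, the sketch below counts invented records by area and hides any count below a threshold. Both the threshold of 5 and the practice of hiding small counts are assumptions added here for illustration; the response above does not describe the specific rules applied to the published statistics.

```python
from collections import Counter

SUPPRESSION_THRESHOLD = 5  # assumed value for illustration, not taken from the project

def aggregate(records, field):
    """Count records per value of `field`, replacing small counts with None
    so that very small groups are not revealed in the published table."""
    counts = Counter(record[field] for record in records)
    return {
        value: (count if count >= SUPPRESSION_THRESHOLD else None)
        for value, count in counts.items()
    }

# Invented records: no names or addresses, only the field being counted
records = [{"local_authority": "A"}] * 7 + [{"local_authority": "B"}] * 2

table = aggregate(records, "local_authority")
print(table)
```

The published table then contains only totals per area, and the two people in area "B" are not shown as a separate figure.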

Reflections on the ethical issues

Questions (Q) and Responses (R).

Q - Can we have definitions of Constitutional privacy, Group privacy, Information privacy, Algorithm governance, Contextual integrity, Contestation, Data minimisation, Informed consent, Precautionary principle?

- R - Ipsos: with help from Anuj, we have added all these definitions to the glossary (see pages 5-7).

Q - How does Anuj think the precautionary principle could be embedded in ALL data sharing projects?

- R - Anuj: When it comes to incorporating safeguards in data sharing projects, the precautionary principle is not the only way forward. Other regulatory approaches include a risk-based approach, where the project organiser(s) has to identify the risks, and a harm-based approach.

None of these approaches may by themselves effectively balance the interests of the data subjects with the benefits of data sharing. Hence, recent research encourages the adoption of a nuanced approach that aims to align the uses of data with the needs and rights of the communities reflected in it. While selecting the regulatory framework, we must be guided by the nature of the data involved and its impact on the rights of data subjects. When it comes to data sharing, not all harms suffered on account of violation of privacy can be monetarily compensated. Hence, depending upon the sensitivity of the data involved, we need to proceed cautiously, both on account of the confidentiality involved and the inferences that can be drawn on the basis of such data. In order to embed effective safeguards in a data sharing project, it would be helpful to carry out a privacy impact assessment of the project and assess the nature of the data involved and the risks to privacy and other human rights.

Q - What does data minimisation mean in practice?

- R - In practice, from an organisational perspective, data minimisation would require setting out clear goals for which data is required, assessing the minimum data required to achieve those goals, developing protocols that restrict access to data and sharing of data, and placing a time limit on the storage of data. You may find this guide from the UK ICO helpful:

Broader issues

Questions (Q) and Responses (R).

Q - How do you at Ipsos handle our data as panellists? What information do you hold on us and where do you get it from? How were we chosen?

- R - Ipsos: great question! You were randomly selected to join this panel and this involved a few stages which we describe below. You might remember receiving an invite in the post from the Sortition Foundation. They specialise in this type of recruitment and you can read more about them on their website. They sent invitations to a random selection of addresses across Scotland. Those addresses were taken from the Postcode Address File (PAF), which is owned by Royal Mail. Organisations who want to use the PAF have to request and purchase it from Royal Mail. Your address was randomly selected to receive an invitation.

When you signed up for the panel you will have completed a form which asked questions about you (like your age, gender, ethnicity etc). This information was used by the Sortition Foundation to make sure that our final selected panel is broadly representative of the Scottish population. With your permission, this information along with your contact information, was passed along to us at Ipsos once you were confirmed as a panel member.

We have used this information to keep in touch with you about the panel. We have also collected further information from you (such as your bank details) to help us run the panel. As we record the sessions to make sure we capture everything, this means further personal information is collected (i.e. your voice is considered personally identifiable information).

All your personal data is held securely on our systems and is only accessible to a few team members. It is never passed to anyone outside of the Ipsos research team and is securely deleted from our systems once the project is complete. You can read more about how we use your personal data in the privacy notice and information sheet that you received in your welcome pack. We have published these on the online community as well so you can have a read any time. If you would like to receive another copy by email, please just get in touch!

Session four: principle forming

The speakers

None

Questions raised during session four

Questions (Q) and Responses (R). All responses provided by the Scottish Government.

Q - Can you request what data the Scottish Government holds on you?

- R - Under the UK Data Protection Act, each citizen has the right to see the personal information organisations hold about them, and to request correction, deletion or restrictions on the use of their personal data: https://ico.org.uk/your-data-matters/your-right-to-get-copies-of-your-data/

Q - Are private sector organisations subject to all the same data rules as public sector ones?

- R - Yes they are.

Q - Is the data ever truly anonymous when being analysed by government? (thinking about the drugs and alcohol case study example which used health data, police data etc)

- R - Given enough time, it may be possible to re-identify individual people from an anonymised dataset. This is strictly prohibited by the civil service code of conduct and professional standards. The type of anonymised data and type of analysis applied on different projects would impact the probability of a person or a group of persons potentially becoming identifiable. This guide from the ICO provides lots of good and trustworthy information on anonymisation: anonymisation-intro-and-first-chapter.pdf (ico.org.uk).
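One simple way to think about how identifiable people are in an anonymised dataset is to ask how many people share the same combination of general details. The sketch below is an illustration only, using invented rows and an invented helper called `smallest_group`; it is not a description of how the Scottish Government assesses this risk.

```python
from collections import Counter

def smallest_group(rows, quasi_identifiers):
    """Return the size of the smallest group of rows sharing the same values
    for the given fields. A result of 1 means at least one person is unique
    on those fields and so easier to single out."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return min(groups.values())

# Invented, already de-identified rows (no names or addresses)
rows = [
    {"age_band": "30-39", "postcode_area": "EH", "service": "health"},
    {"age_band": "30-39", "postcode_area": "EH", "service": "police"},
    {"age_band": "70-79", "postcode_area": "IV", "service": "health"},
]

k = smallest_group(rows, ["age_band", "postcode_area"])
print(k)
```

Here the person aged 70-79 in the "IV" area is the only one with that combination, so they would be easier to re-identify than the other two. The more details a dataset holds about each person, the more likely such unique combinations become.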

Contact

Email: michaela.omelkova@gov.scot
