Child Poverty Practice Accelerator Fund (CPAF) round 1: reflections and lessons

This report provides learnings and reflections from the evaluation support offered during round one of the Child Poverty Practice Accelerator Fund (CPAF).

2. Lessons about providing evaluation support

Key learnings – what works when providing evaluation support?

- Adopting a collaborative approach to designing evaluation support tools maximises buy-in from partners and further supports upskilling in M&E.

- Providing flexibility in terms of timing of support and the support on offer is important as partners’ needs change as projects develop.

- Recognising and responding to the diversity of support needs means responding to the diverse types of CPAF project and the diverse types of people working to deliver them.

- Developing mechanisms for peer sharing of learning via a community of practice encourages a strong culture of learning and the sharing of best practice.

Urban Foresight, as the evaluation support partner, had two main roles: to develop an evaluation framework for the Fund and to engage with local leads and partners of the funded projects to support the set-up and embedding of monitoring and evaluation practices.

This support was delivered in three main ways. Urban Foresight:

- Developed the Evaluation Framework and an Evaluation Guide by adopting a collaborative approach with the CPAF partnerships and the Scottish Government.

- Designed and delivered ten upskilling workshops. Eight of these were held online and a further two were hybrid (in-person / online).

- Provided up to three hours of flexible one-to-one support per project, to be used at times that suited the partnerships.

This chapter explores these three steps in detail, explaining what was done and what was learned. This is particularly relevant in the current policy context, where there is a growing focus on place-based approaches that are person-centred and that seek to improve wider systems to better tackle child poverty. The chapter ends with overarching lessons that reflect on the support package as a whole.

Additionally, by having multiple engagements with projects, Urban Foresight received feedback and thoughts on emerging lessons and encouraged and facilitated knowledge sharing between the CPAF projects. The following chapter includes reflections on this emerging learning.

Step 1: Collaboratively designing the evaluation framework

A collaborative approach was adopted in designing the Evaluation Framework and an associated Evaluation Guide. This section reflects on the process taken and lessons learned.

Why is an evaluation framework needed?

Developing an evaluation framework for innovative approaches and funds is important to ensure that lessons are gathered and reflected on and that impacts are captured as they emerge. It also ensures consistency in reporting across projects, while the framework format still allows flexibility.

What were the challenges to consider when designing an evaluation framework for CPAF?

Because the CPAF projects differ so much in focus, approach, and intended aims and outcomes, the CPAF Evaluation Framework had to be flexible and wide-reaching.

The Framework also had to be easy for diverse users to understand and use, from senior officers in local authorities to community engagement specialists in third sector organisations.

There was also an expectation that reporting requirements would not be too onerous for partners and that the framework could be used for future rounds of CPAF.

What was the approach taken to develop the CPAF evaluation framework?

A highly collaborative approach was used to design the framework, engaging the CPAF partners and the Scottish Government team. This ensured the framework was:

- user-friendly to a diverse user group, with differing levels of evaluation knowledge and skills;

- aligned with wider Scottish Government approaches to evaluation;

- able to work for current CPAF projects and open enough to be used by other innovative approaches.

Collaborative approaches are also useful to maximise buy-in from delivery partners. More than this, the collaborative approach gave participants an insight into the process of developing an evaluation framework. It therefore supported the goal of upskilling partners in M&E.

How was the Evaluation Framework collaboratively developed?

First, the expectations and needs of the Framework, and the wider policy and M&E context in which CPAF sits, were outlined and agreed with the Scottish Government. Then, a desk-based review of documents around child poverty and Scottish social policy evaluations was conducted in order to better understand the policy landscape and draw on useful learnings from other M&E frameworks. Two draft versions of the Framework were shared with the Scottish Government team at this stage.

Next, collaborative evaluation design workshops were held with the successful CPAF partnerships in December 2023 and January 2024. Here, the draft framework was presented, and participants were given the opportunity to comment on and further shape the Framework and the associated Evaluation Guide. Participants submitted feedback during and after the workshops. Feedback was incorporated to develop the finalised Evaluation Framework (Figure 1).

What did the Evaluation Guide consist of?

An Evaluation Guide was developed as a Word document (see Annex 3: CPAF Evaluation Framework) to provide information and guidance about how to use the framework and how to conduct M&E activities.

Responding to participants’ requests and preferences, it included:

- The overall evaluation framework (see Figure 1).

- Detailed information and guidance about each of the four overarching themes of the Framework.

- Guidance about how to develop an evaluation approach, considering what needs to be measured and types and methods of data collection.

- Monitoring form and insight reporting templates to be adapted by partners.

What was learned from designing the Evaluation Framework and Guide?

Diverse experiences and wide-ranging needs. The process of designing the Evaluation Framework and Guide brought attention to the variation in participants’ baseline understanding and experience of evaluation. Some had almost no previous experience, while others had been involved in evaluating child poverty interventions for over 20 years. This shows the importance of developing guidance that is wide-ranging and of providing links to more detailed support.

Documents which can be easily edited and adapted. The collaborative approach to developing the Evaluation Framework and Guide also highlighted that participants prefer to receive evaluation frameworks as editable Word or PowerPoint files where possible. CPAF participants appreciated being able to edit documents and quickly update them for their own use.

| How is the design and implementation going? | How effectively is the project meeting its objectives? | How well is partnership working going? | What has the project team learned? |
|---|---|---|---|
| How well does the project engage those with lived or professional experience? | What is going well (strengths), not so well (barriers), and why? | How have existing and new partnerships been used to develop the project? | What has been learned and shared that adds to the knowledge base on child poverty? |
| What skills, capacity and resources are needed to implement? | What has had to change, how and why? | Which partnerships have been important for achieving the project objectives? | What has been learned and shared about the process for tackling child poverty? |
| How can the work be built on when funding ends? | How successfully is the project innovating? | What are the wider impacts of partnership working? | Should the project be scaled / replicated? If so, how? |

Step 2: Designing and delivering the upskilling workshops

The evaluation support package was also developed using a collaborative approach that met the needs of the CPAF partnerships. This section reflects on the process taken and lessons learned.

Why were workshops needed?

Upskilling workshops were organised to set up and embed monitoring and evaluation practices across CPAF projects. The workshops aimed to build capacity and capability by providing training and opportunities for sharing best practice.

How were the themes of the workshops decided?

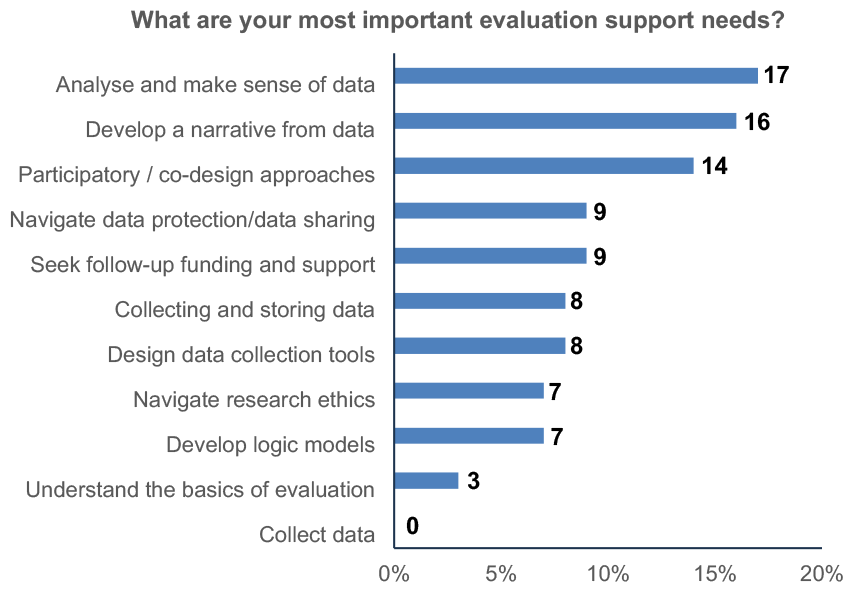

A simple evaluation support question was asked of CPAF participants in the initial stages of evaluation support (see Figure 2). Understanding of participants’ support needs was updated across the evaluation support period via short surveys and opportunities for feedback in workshops.

Feedback was gathered from the surveys to tailor the support offer to participants’ needs. The flexible and collaborative approach recognised that participants may not understand their weaknesses or support needs until they began core project activities. The first draft of the workshop schedule therefore included ‘to be confirmed’ sessions towards the end.

In the initial poll, respondents identified data analysis, data presentation and using participatory or co-design approaches as major support needs (see Figure 2).

At the mid-point, feedback showed that data narratives and communication approaches had emerged as important support needs. While communication is not traditionally seen as an evaluation activity, it was deemed valuable to include so that partners could communicate the findings of their evaluations more effectively and with greater impact.

Further, a commitment to flexibility ensured additional time was spent on these topics in the final sessions. Table 3 outlines the final workshop topics, dates and attendance numbers.

How were the workshops delivered?

Workshops were open to all projects. Participants came from local authorities, health boards, and third sector organisations. In total, 36 participants were engaged via the workshops.

All workshops were held online via Microsoft Teams, but two workshops were hybrid events, hosted from the Scottish Government offices in Atlantic Quay, Glasgow. Glasgow was chosen as it was the most accessible location for the Round 1 CPAF projects.

Workshops ran as follows:

- Workshops lasted two hours and included a mid-point break, except for the in-person sessions, which were half-day events.

- PowerPoint slides were circulated 48 hours in advance of sessions.

- Key discussion points were added to an online digital whiteboard with space for participants to leave other questions. For the most part, however, discussions took place naturally via the voice and chat functions in Teams.

- All but the first workshop included extended space for peer learning and sharing. This responded to early feedback where participants noted that they valued listening to others and sharing best practice.

- Elements of the workshop where training was being delivered were recorded. To reduce data sharing risks, recordings were paused when participants engaged and shared their knowledge and experiences.

- Following the workshops, recordings, slides (including any updates) and additional resources (such as links to external support websites) were circulated via email. This meant that participants who became involved in CPAF projects after the initiation period in January 2024 were able to review past materials.

| Title | Themes | Date | Attendance |
|---|---|---|---|
| Collaboratively designing the evaluation framework | Developing a draft evaluation framework and understanding what evaluation support teams would like in future capacity-building workshops. | 13th December 2023 and 16th January 2024 | 8 (across the two sessions) |
| Introduction to CPAF evaluation | Getting to know one another and discussing the evaluation approach. | 14th February 2024 | 6 |
| Co-design and participatory approaches | Using co-design and participatory approaches. | 28th February 2024 | 12 |
| Data storage, sharing, and protection | Develop secure and ethical approaches for storing data and navigating data protection and sharing (with a brief intro to logic models and achievable outcomes). | 13th March 2024 | 5 |
| Economic evaluations | Understanding how to evaluate return on investments or value for money. | 27th March 2024 | 3 |
| Using systems methods | Methods to evaluate the success of system approaches and partnership working. | 10th April 2024 | 11 |
| Data analysis and narratives (in person) | Analyse and develop a narrative from data. | 23rd April 2024 | 5 (online), 8 (in-person) |
| Communication and dissemination | How to communicate and disseminate your key messages. | 22nd May 2024 | 8 |
| Next steps (in person) | Seek follow-up funding and support. Legacy building. | 19th June 2024 | 5 (online), 7 (in-person) |
| Lessons learned | Reflections and lessons to-date on project design and implementation, objectives, partnership working and the child poverty system. | 4th September 2024 | 14 |

What was the impact of these workshops for local projects?

After the final evaluation support workshop, a survey was circulated to all participants via email to understand how effectively the workshops had supported participants’ needs. Nine respondents provided feedback via this survey, representing seven projects. Additional feedback was gathered via the short workshop surveys as well as via comments made during workshops and to Urban Foresight and the Scottish Government in one-to-one meetings.

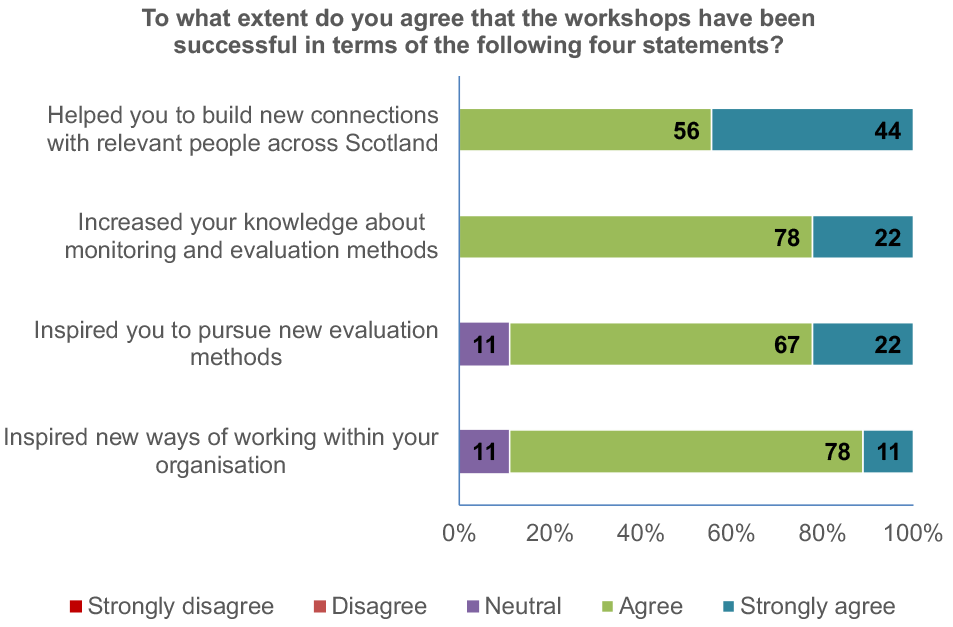

All respondents agreed or strongly agreed that the workshops increased their knowledge about monitoring and evaluation methods (Figure 3).

‘It has reminded me of the importance of thinking about evaluation from the outset; about the wide range of approaches that can be taken and about how to use learning on an ongoing basis.’

In some cases, the workshops facilitated a mindset shift about the value of evaluation. Various participants noted that the learnings gained from the evaluation support offer would likely influence their own approach to evaluation in the future, as well as that of their organisation.

‘Attending the workshops has really helped me to have a fuller understanding of how important evaluation can be in future decision making and to really analyse the effectiveness of a project or piece of work.’

‘The most important thing I've gained is to step back and consider what questions we most need to answer to support the shape of future work.’

Further, Figure 3 shows that all respondents agreed that the workshops helped them build new connections, and 89% felt the workshops had both inspired them to pursue new evaluation methods and inspired new ways of working within their organisation.

‘It's made me think about putting more time in to planning the evaluation and thinking about different methods.’

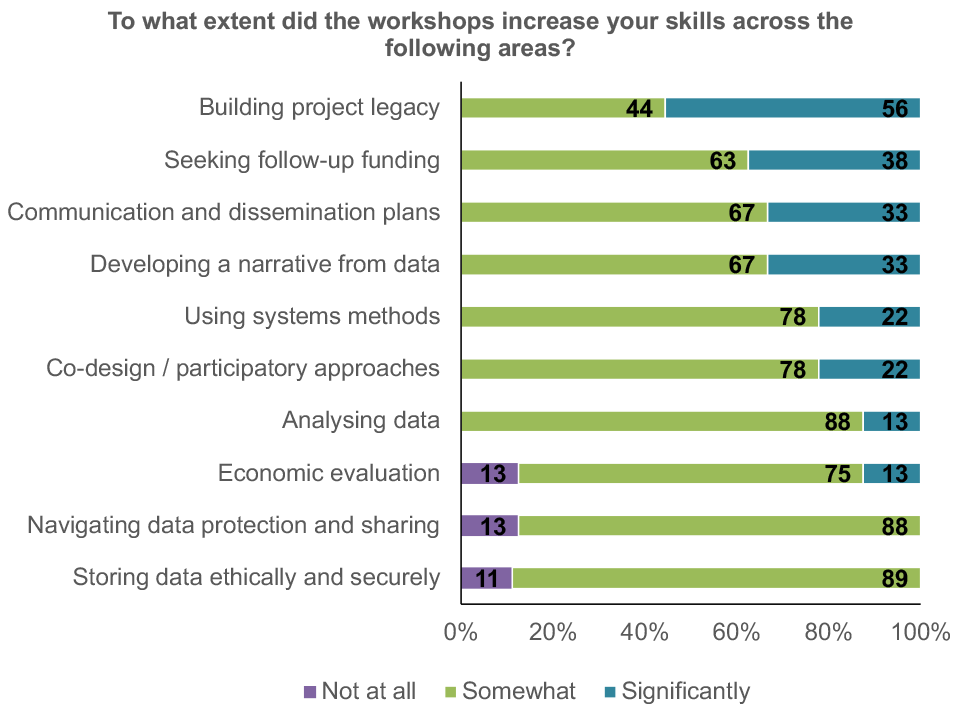

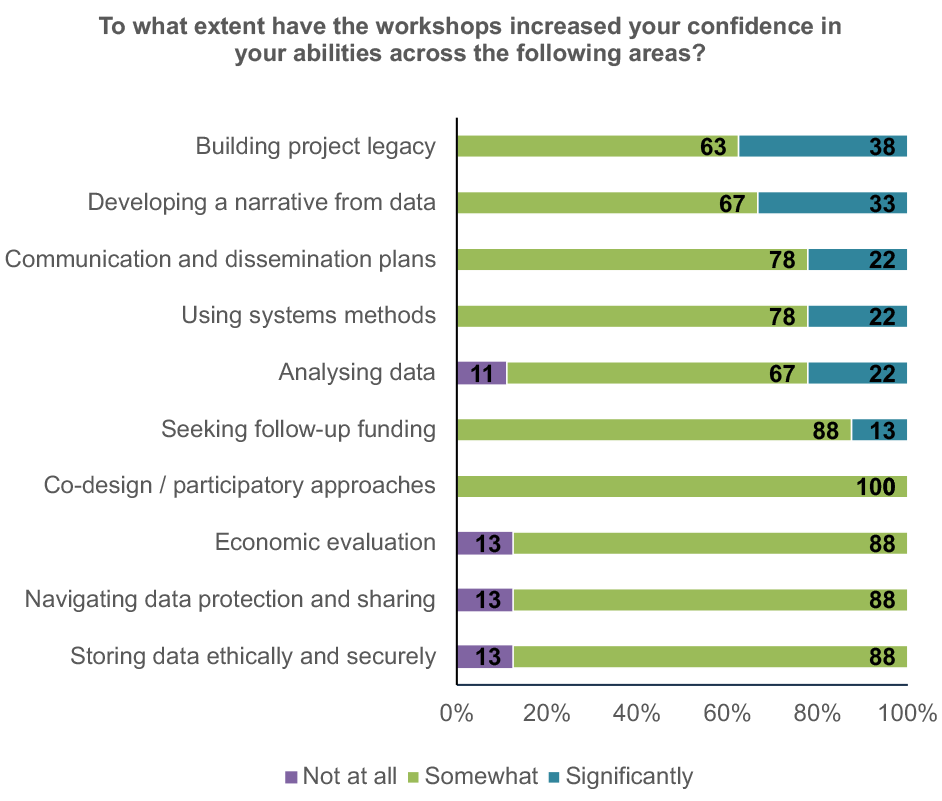

Workshops also increased participants’ M&E skills (Figure 4) and their confidence to apply their new skills (Figure 5). Figures 4 and 5 show that survey respondents greatly increased their skills and confidence in building project legacy, seeking follow-up funding, and developing communication plans and narratives from their data.

‘The trainings I did attend were really valuable and helped me upskill in regard to data analysis, using systems methods (which was extremely helpful) and also using narrative for evaluation.’

What was learned from organising evaluation support workshops?

Organising the workshops collaboratively revealed participants’ preferences about the most effective type and timing of support. Participants prefer:

- receiving materials in advance of an evaluation support workshop;

- having access to multiple learning resources;

- being able to access resources (including recordings) after workshops;

- having both in-person and online options;

- having opportunities to learn from and share with their peers.

There is a preference for receiving materials in advance of a workshop. Participants noted that this:

- supports individuals with specific learning needs by allowing people to view the information at their own pace.

- allows multi-partner or multi-person teams to make informed decisions about who is best to attend.

- serves as a useful reminder about the session that can support attendance.

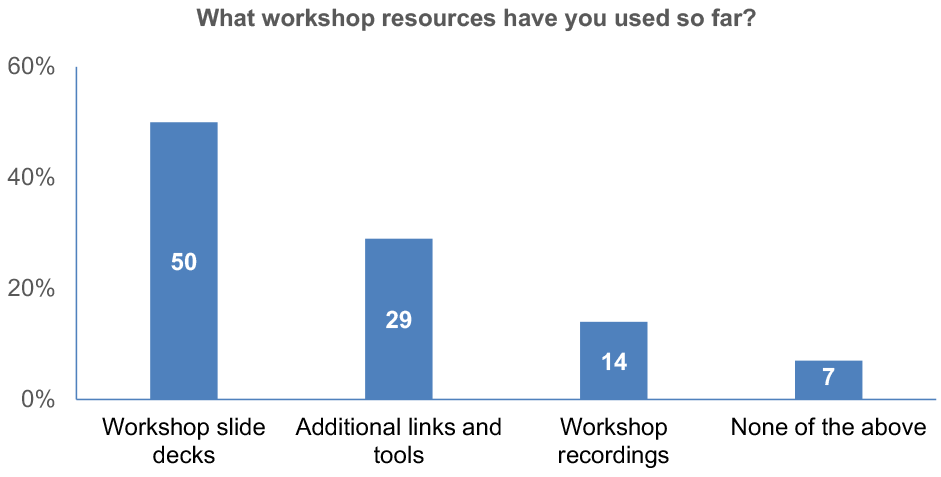

The provision of multiple learning resources is important. As illustrated in Figure 6, recordings, slides, and additional links were all accessed following sessions.

Participants noted that the value of multiple learning resources includes:

- Supporting people with different learning styles and preferences.

- Allowing those who are unable to attend live to receive a similar level of training.

- Giving people the opportunity to return to previous sessions at times that suit their projects.

‘I also really appreciated the recordings for the trainings that I was not able to make in person, and also to go over some of the learning previously.’

Participants appreciated having access to the resources following the sessions. This was useful for people who were unable to attend sessions due to leave or other meetings, and it ensured new team members could get up to speed with workshop topics that had already been delivered. This suggests that a dedicated library of resources is likely to be useful in the longer term to ensure good practice is not lost.

‘Thank you for the slides and recording from last week’s workshop. It was very informative and there was a lot to take in’

Access to resources after the sessions is also important given the limited time available for upskilling. While the two-hour workshops were effective in building participants’ capacity, two hours was not always enough time to embed new skills and knowledge.

‘I found the sessions worthwhile but like everything a couple of hours is never enough to embed new thinking or to upskill new knowledge. It’s like we have touched the surface but need the ongoing support to use the learning.’

Extending all workshops beyond two hours is likely to limit participants’ ability to attend. However, offering longer sessions on the more technical topics such as data analysis or economic evaluations (areas where participants are less confident about their skills) may be beneficial for Round 2.

Participants voiced their preference for a combination of online and in-person options for the evaluation support workshops. In-person activities bring significant benefits, but online workshops are accessible to more people. It is therefore important that in-person workshops come with assurances of hybrid engagement for those unable to attend in person. Those who attended the in-person sessions particularly enjoyed the opportunities to network and collaborate in person.

‘It was very helpful they were online, [… attending can be] quite a commitment on top of the day-to-day job.’

‘I was unable to attend in person therefore was able to join remotely which meant I did not miss out on any content, also I was able to include two other team members who are working on the project to attend [the online] workshops.’

Participants valued the opportunity to share best practice and to connect with and learn from other participants. This was understood to be akin to a community of practice. It demonstrates a keen desire for networking and sharing of best practice, and highlights that future support should build in space for this from the outset.

‘I have found working with Urban Foresight has helped build and strengthen the local partnership, it provided dedicated time for us to get to speak to each other and learn from other local authorities and projects.’

Step 3: One-to-one evaluation support sessions

The final element of M&E support was the offer of flexible one-to-one support sessions. This section reflects on the process taken and lessons learned about providing ad hoc one-to-one support.

Why was one-to-one support offered?

Each partnership was offered three hours of flexible one-to-one support sessions. The aim of these sessions was to support local areas to build capacity around monitoring and evaluation through offering bespoke support.

How did projects use the one-to-one support?

There was significant flexibility in the one-to-one sessions in terms of both topics that could be discussed and when the support could be used. This was highly appreciated by the partnerships.

Of the estimated 27 hours of support, 11.5 hours were used by project leads. There are several reasons for the limited take-up. For example, two project teams opted out of the one-to-one support due to shorter timelines and clear project boundaries. Two others procured external evaluators who provided dedicated support. Others felt that the collaborative workshops were sufficient and that they did not have any additional evaluation support needs.

The one-to-one support was primarily used at the start of the evaluation support activity, between January and March 2024. However, there were also requests for support towards the end of the evaluation support offer, between September and November 2024. Early sessions were used to support partners to think about their potential evaluation approaches, plans, and support needs. Later sessions were more focused on specific needs such as effective ways of communicating emerging findings.

Although not all hours were claimed, CPAF projects were regularly reminded about the offer during workshops and via email.

What was the impact of the one-to-one support for projects?

For projects that needed the additional one-to-one support, the sessions were viewed as a valuable opportunity to get more targeted support.

‘These were extremely beneficial to have a critical friend supporting our development of evaluation framework.’

The one-to-one sessions were designed to give projects opportunities to either obtain more detailed support on a general evaluation area or to receive highly tailored project-specific support.

For example, some projects asked for feedback on their evaluation approaches, tools, or logic models or asked for advice as a critical friend on planned work and activities. Others requested capability building on specific topics. Topics were wide ranging, including but not limited to:

- Leveraging systems mapping for evaluations.

- Collecting and understanding quantitative data (value for money and physical and mental health).

- Co-designing with community groups.

- Evaluating the process of joined up working.

- Feedback on evaluation approach to-date.

- Meaningfully sharing the results of the evaluation.

- Seeking support on how to communicate about the test and trial nature.

What was learned from providing one-to-one support?

Maintaining a flexible approach for one-to-one support is important. One-to-one sessions were most successful and effective when the project teams submitted topics for discussion and/or shared materials for review ahead of the session. However, it was important to ensure flexibility as there was value in more open discussions, as these conversations allowed for unknown issues to emerge and be addressed.

Ensuring evaluation support is available throughout the duration of projects. It is also important to reflect on the timing of requests for one-to-one support. Most came either early in the process or towards the end of the evaluation support offer. Early sessions tended not to have specific questions and were more general, while the later requests for support suggest that projects were not immediately aware of their evaluation support needs.

One-to-one sessions were useful to help plan support content for later workshops. For example, early sessions highlighted that many partnerships were unclear on how to collect data evidencing systems change. A systems methods workshop was then designed to aid learning in this area.

What was learned from delivering the evaluation support package?

The process of collaboratively designing the Framework and support, running one-to-one sessions and workshops, and receiving feedback from participants brought attention to four key learnings that are important to consider for the Round 2 CPAF evaluation support. These are discussed below.

Collaboration is vital

Collaborating with the projects throughout the duration of the M&E support was important to ensure the support remained useful and appropriate to changing needs and skills gaps. A collaborative approach should be used for Round 2.

The early commitment to collectively designing the Evaluation Framework and Guide was important to set a collaborative culture. Building in multiple opportunities for formal and informal feedback throughout the support ensured participants’ needs continued to shape the support as it developed.

Participants appreciate flexibility as support needs change over time

Participants greatly appreciated the flexible offer of support. This was useful given the diversity of projects in terms of timescales, types of participants, intended aims, approaches and methods.

Flexible support included flexibility in terms of topics, and in providing multiple ways to engage with the support: workshops, one-to-ones, and access to resources.

Flexibility was also useful because participants’ needs changed over time. Indeed, at the outset, many projects were unsure about their support needs. This may be because some of the concepts were more abstract during the earlier design phase, and participants became more aware of their own needs and limitations only as they began project implementation.

‘I also learned how important it is to be involved from the very early stages of designing the program/ project in order to gather meaningful data and tell a really powerful story of how the program or project has been effective in creating change and/ or transformation for the clients/ users of the program.’

Skills gaps and needs are diverse

Delivering the support brought attention to the diversity of evaluation support needs that depend on project needs, existing capacity and the types of participants that are involved in projects.

Because the Round 1 CPAF projects were so varied, the diversity of needs is to be expected. However, there are some broad points that are useful to consider when developing a programme of M&E support for Round 2 CPAF projects. In particular:

- Participants are less skilled or confident in data storage and sharing, and in analysing qualitative and quantitative data. Effectively, these areas represent the more technical and specialist side of evaluation.

- Despite not being a requirement of CPAF’s evaluation, projects often sought additional support around quantitative economic evaluations of value for money or return on investment. Participants noted that their host organisations were facing internal and external financial pressures, and the need to evidence financial benefits was vital to obtain support for interventions beyond March 2025.

- Projects requested additional support on building legacy and communicating findings of the work. As noted, these are beyond traditional M&E concerns. That multiple projects raised them as support needs, however, highlights how many are facing barriers in sharing and communicating their work and successes with others.

- Projects also sought support for using systems thinking methods in their evaluation. Systems evaluation methods are still emerging, which means that the corresponding skill set is not yet widely held.

Sharing learning in a community of practice has significant value

Participants valued the opportunity to feel part of a community of practice and share lessons and experiences with their peers just as much as the evaluation support. They also found value in knowing others face similar challenges.

‘I really enjoyed meeting others in person and sharing learning to build connections.’

Learning was shared across projects and from diverse types of participants. Those from third sector organisations, for example, tended to know more about co-design and collaborative approaches than those from local authorities.

‘Hearing from colleagues in other local authorities who have much more real-world engagement and experience working with residents than I do as an analyst.’

‘Explaining what we're doing to others outside of the council helps to shape our thinking and broaden our perspective.’
