Post-school funding body landscape simplification: outline business case

Appraisal of three shortlisted options to simplify Scotland’s post-school funding body landscape. This Project aims to strengthen the foundations and build a flexible, agile and responsive post-school education, skills and research system to meet Scotland's needs.

3. Socio-Economic Case

3.1. Introduction

The purpose of the Socio-Economic case is to present the options that deliver best public value to society, including wider societal effects, and the economic appraisal of short-listed options to deliver the Project. The following sections outline the:

- Long-listed options and their appraisal,

- Detailed short-list appraisal, and

- Recommendation based on the analysis presented.

3.2. Long-listed options and appraisal

The purpose of the long-list appraisal is to narrow down the possible options to a short-list of viable options for detailed appraisal. These are options that meet the requirement of delivering the spending objectives and satisfy the critical success factors, while taking into account the risks, constraints and dependencies set out in the Strategic Case.

3.2.1. Long-list options

Eight long-listed options, with sub-options for different delivery methods, were developed through several workshops and engagement with policy areas. They attempt to address the objectives and business needs set out in the Strategic Case and are presented in Table 5 below.

| Option | Provision | Student support |
|---|---|---|
| Option 1 | Business as usual. | |
| Options to solely move provision | | |
| Option 2 | Movement of National Training Programmes (NTP) funding to SDS. | No changes. |
| Option 3 | Movement of NTP funding and functions to: a. SFC; b. SAAS; c. SDS | No changes. |
| Options to solely move student support | | |
| Option 4 | No changes. | All student support funding delivered through: a. SFC; b. SAAS; c. SDS |
| Options to move both provision and student support | | |
| Option 5 | Movement of NTP funding to SDS. | Movement of FE student support funding to SAAS. |
| Option 6 | Movement of NTP funding and functions to: a. SFC; b. SFC; c. SFC; d. SAAS; e. SDS; f. SDS; g. SDS | All student support funding delivered through: a. SAAS; b. SFC (SFB); c. SDS; d. SAAS; e. SAAS; f. SFC; g. SDS |
| Option 7 | Movement of all provision and functions to: a. SAAS; b. SDS; c. SDS | All student support funding delivered through: a. SAAS (SFB); b. SAAS; c. SDS (SFB) |
| Options to create an entirely new funding body for provision and student support | | |
| Option 8 | A new single funding body. | |

3.2.2. Critical success factors

To assess options consistently, a set of critical success factors (CSFs) closely related to those proposed by HM Treasury was identified. This is a key part of the standard methodology for evaluating long-list options and provides a useful framework for scoring options against each other. The CSFs are outlined below.

Strategic fit and meeting business needs - How well the option meets the agreed spending objectives, related business needs and service requirements, and how well it provides holistic fit and synergy with other strategies.

In the context of this project, this means:

- the option must support the achievement of strategic priorities and spending objectives as outlined in the SOC, such as the Purpose and Principles, Public Service Reform and NSET.

- the option provides holistic fit and synergy with other SG strategies and programmes.

- the option must comply with SG policy, for example on the establishment of new public bodies.

- transparency, fairness and accessibility of provision are built into the core of the programme.

- the structures, governance, operating frameworks, guidance and standards put in place through the option deliver efficient, impactful services.

- the option results in a more agile and responsive system that is accountable, trusted to deliver and subject to effective governance.

Potential value for money - How well the option optimises social value (social, economic and environmental), in terms of the potential costs, benefits and risks.

In the context of this project, this means how well the option:

- recognises the value and basis of the system used for the distribution of investment in learners.

- contributes to a competitive and inclusive economy, and the wellbeing economy.

- makes the best use of public funds by removing unnecessary duplication and overlap in the structure of the bodies, so that resources and capacity are aligned to priorities, while optimising social value in terms of potential costs, benefits, efficiencies and risks and minimising adverse impacts.

- promotes best value across the delivery of funding by improving quality, efficiency and effectiveness.

Supplier capacity and capability, operational fit - How well the option matches the ability of potential suppliers to deliver the required services and appeals to the supply side.

In the context of the SOC, this means how well the option:

- provides necessary staff and systems/IT skills and capabilities to deliver the funding body functions and activities.

- provides holistic operational fit and synergy with operations, processes and components of the funding landscape.

Potential affordability - How well the option can be financed from available funds, and whether the balance of investment against the improved outcomes aligns with resourcing constraints.

In the context of this project, this means:

- whether the option is affordable, noting budget pressures.

Potential achievability - How well the option is likely to be delivered given an organisation's ability to respond to the changes required, and how well the option matches the level of available skills required for successful delivery.

In the context of this project, this means:

- it must be possible to deliver the option from a legal perspective: will legislative change be needed and, if so, what is the scale of change required and is it reasonable?

- consideration of timescales set by Ministers.

- the extent to which there is appropriate resourcing, and relevant project management with the capacity and capability to deliver the transformation.

- whether it is possible to create the right leadership and delivery culture to secure the benefits of reform.

3.2.3. Long-list options assessment

As per the key principles of the HMT Green Book guidance, options in the long-list are discounted if they:

- fail to deliver the spending objectives and CSFs for the Project;

- appear unlikely to deliver sufficient benefits, given that the intention is to deliver value for money;

- are clearly impractical or unfeasible;

- are clearly inferior to another option because they have greater costs and lower benefits; or

- violate constraints, for example by being clearly unaffordable or too risky.

Where options are sufficiently similar, a single representative option may also be selected for detailed analysis.

SG policy officials and analysts collectively assessed all long-list options against the spending objectives and CSFs. Each option was rated “red”, “amber” or “green”, indicating that the delivery structure did not satisfy, partially satisfied or fully satisfied the relevant appraisal criteria. The assessment also took into account constraints, dependencies, unmonetised and unquantifiable factors, and unintended consequences, based on currently available evidence.

Legal Considerations

In developing and considering the long list of options, legal considerations, focussed primarily on the legislative requirements needed to deliver the options, were explored with SGLD colleagues. These considerations were scored under Critical Success Factor 5 (Potential achievability), which also reflects other factors such as resourcing constraints.

A further consideration was the risk of ONS reclassification if changes to the post-school funding body landscape were deemed to increase the degree of control that Ministers have over universities.

While any option could technically be achieved through changes to primary legislation, not all are advisable. It was therefore agreed that: options deemed inadvisable based on initial legal advice[36] were rated red; options that would require changes to primary legislation to be achievable, but were advised to be feasible following initial legal advice, were rated amber; and options that would not require any changes to primary legislation and were practicable to pursue were rated green.
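The legal rating rule above is a simple three-way decision. A minimal Python sketch follows, assuming two hypothetical yes/no inputs (`inadvisable` and `needs_primary_legislation`) that summarise the initial legal advice. It covers only the legal element of CSF 5, not resourcing or other factors, and it assumes that any option not deemed inadvisable is practicable to pursue, a case the rule leaves implicit.

```python
from enum import Enum


class Rating(Enum):
    RED = "R"
    AMBER = "A"
    GREEN = "G"


def legal_rating(inadvisable: bool, needs_primary_legislation: bool) -> Rating:
    """Rate the legal element of CSF 5 from initial legal advice (hypothetical inputs)."""
    if inadvisable:
        # Deemed inadvisable on initial legal advice: red, regardless of other inputs.
        return Rating.RED
    if needs_primary_legislation:
        # Advised to be feasible, but only with changes to primary legislation: amber.
        return Rating.AMBER
    # No primary legislation needed; assumed practicable because not inadvisable: green.
    return Rating.GREEN


# Example: an option advised to be feasible but needing primary legislation.
print(legal_rating(inadvisable=False, needs_primary_legislation=True))
```

Checking "inadvisable" first mirrors the rule's priority: legal advice against an option rates it red whether or not legislation would be needed.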

The outcome of the long-list assessment can be found below in Table 6. A more detailed description of the assessment can be found in Annex C.

In the table below, columns SO1 to SO4 are the spending objectives and CSF1 to CSF5 are the critical success factors. R, A and G denote red, amber and green ratings.

- SO1: To simplify operational responsibility across the post-school funding landscape.

- SO2: To reduce costs and increase efficiencies in operation of system.

- SO3: To improve availability and quality of data collection.

- SO4: To enable targeted and equitable distribution of funding to support the learner.

- CSF1: Strategic fit and meeting business needs

- CSF2: Potential value for money

- CSF3: Supplier capacity and capability; operational fit

- CSF4: Potential affordability

- CSF5: Potential achievability

| Option | Description | SO1 | SO2 | SO3 | SO4 | CSF1 | CSF2 | CSF3 | CSF4 | CSF5 |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Business as usual | R | R | R | R | N | N | N | N | N |
| 2 | Movement of NTP funding only to SDS from SFC. No changes to student support funding arrangements | R | R | A | R | R | A | G | G | R |
| 3a | Movement of NTP funding and staff to SFC | A | A | A | A | A | G | A | A | A |
| 3b | Movement of NTP funding and staff to SAAS | R | R | R | R | R | R | A | A | R |
| 3c | Movement of NTP funding and staff to SDS | R | R | R | R | R | A | G | A | R |
| 4a | All student support funding delivered through SFC. No changes to NTP funding or wider provision arrangements | R | R | A | A | R | R | A | A | A |
| 4b | All student support funding delivered through SAAS. No changes to NTP funding or wider provision arrangements | A | A | A | A | A | G | G | A | A |
| 4c | All student support funding delivered through SDS. No changes to NTP funding or wider provision arrangements | R | R | R | A | R | R | R | A | R |
| 5 | Movement of NTP funding only to SDS from SFC and movement of FE student support funding (staff moves to be reviewed at technical group stage) from SFC to SAAS | A | A | A | A | A | G | G | A | R |
| 6a | Movement of NTP funding and staff to SFC and all student support funding delivered through SAAS | G | G | G | G | G | G | A | A | A |
| 6b | Movement of NTP funding and staff to SFC and all student support funding delivered through SFC, i.e. a single funding body built on SFC systems and structures | G | G | G | G | G | A | A | A | A |
| 6c | Movement of NTP funding and staff to SFC and all student support funding delivered through SDS | A | A | G | G | A | R | R | A | R |
| 6d | Movement of NTP funding and staff to SAAS and all student support funding delivered through SAAS | A | A | A | A | A | R | A | A | R |
| 6e | Movement of NTP funding and staff to SDS and all student support funding delivered through SAAS | A | A | A | A | A | A | G | A | R |
| 6f | Movement of NTP funding and staff to SDS and all student support funding delivered through SFC | R | R | A | A | R | R | A | A | R |
| 6g | Movement of NTP funding and staff to SDS and all student support funding delivered through SDS | R | R | A | A | R | R | A | A | R |
| 7a | Movement of all provision and staff to SAAS and all student support funding delivered through SAAS, i.e. a single funding body built on SAAS systems and structures | A | G | G | G | A | A | A | A | R |
| 7b | Movement of all provision and staff to SDS and all student support funding delivered through SAAS | A | G | A | G | A | A | A | A | R |
| 7c | Movement of all provision and staff to SDS and all student support funding delivered through SDS, i.e. a single funding body built on SDS systems and structures | A | G | G | G | A | A | A | A | R |
| 8 | A new single funding body | G | G | G | G | A | A | A | A | A |

3.3. Short-listed options

As per the HMT Green Book Guidance, all shortlisted options must be viable and meet the requirement of delivering the objectives. The shortlist must include Business as Usual, a realistic and achievable ‘do minimum’ that meets essential requirements, the preferred way forward if different to ‘do minimum’, and any other options that have been carried forward.

The short-list options carried forward for detailed appraisal are presented in Table 7.

| Option type | Short-listed option |
|---|---|
| Business as usual | 1. Business as usual. |
| Preferred way forward; do minimum | 2. Movement of NTP funding and functions to SFC and all student support funding delivered through SAAS. |
| More ambitious | 3. Movement of NTP funding and functions to SFC and all student support funding delivered through SFC. |

Besides BAU, the options that sufficiently satisfy the assessment criteria are movement of NTP funding and functions to SFC with all student support funding delivered through SAAS (previously Option 6a, now Option 2), assessed as the preferred way forward, and movement of NTP funding and functions to SFC with all student support funding delivered through SFC (previously Option 6b, now Option 3), assessed as the more ambitious way forward.

Although several of the options in the long-list at least partially meet the objectives and strategic ambitions for the Project, they either do not cover the full core scope of the Project, leaving the landscape fragmented, or are more challenging from a legal or value-for-money perspective, or both. The short-listed options are sufficiently similar to these options, either combining elements of them or offering a similar solution, while being more straightforward from a legislative perspective and more favourable in value-for-money terms.

More detailed descriptions of shortlisted options are as follows:

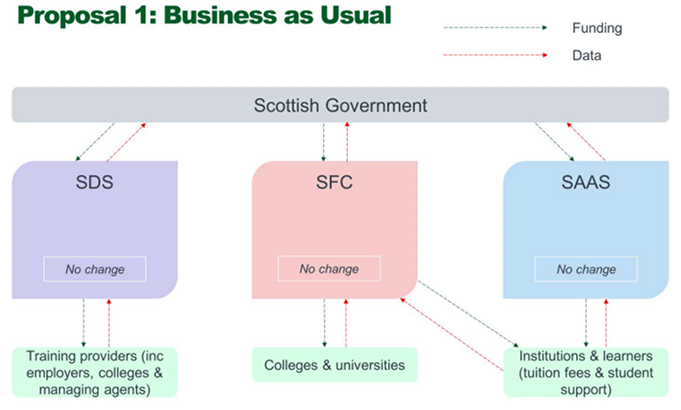

Option 1: Business as usual

Post-school education and skills funding would continue to be delivered as now through the three public bodies (i.e. SFC, SAAS and SDS).

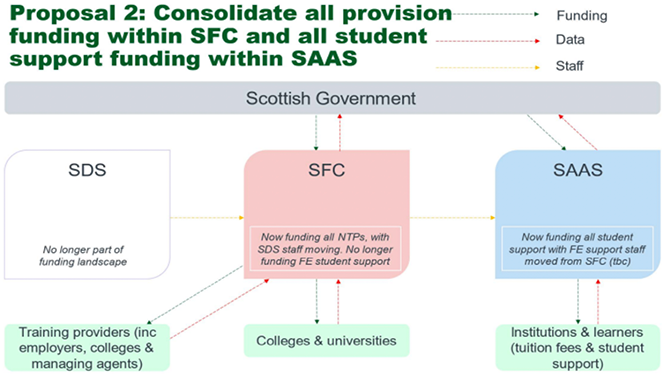

Option 2: Consolidate all provision funding within one public body (SFC) and all student support funding within one public body (SAAS)

This means:

- moving National Training Programmes (including provision for apprenticeships) funding and functions from SDS to SFC; and

- moving FE student support funding and functions from SFC to SAAS so that all student support funding is delivered through SAAS.

Option 2 is likely to require the Further and Higher Education (Scotland) Act 2005[37] to be amended to enable all provision, including apprenticeships, to be funded by one public body (i.e. SFC) and all student support to be funded by one body (i.e. SAAS).

NTP funding, data, systems and staff needed to support delivery would be transferred from SDS to SFC. This would result in a single public body with responsibility for all types of provision.

In Option 2, SFC would transfer responsibility and funding for administering FE student support funding to SAAS. Details of funding, staffing and system changes will be worked through with technical groups that the Scottish Government will convene, including with HR, trade unions and groups of staff most likely to be impacted by reform.

Research would remain with SFC. This would maintain the link between research and learning and teaching, which was highlighted as important during development of the Purpose and Principles.

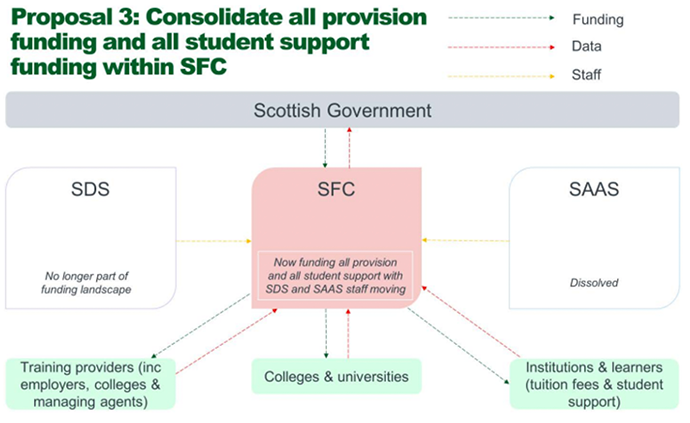

Option 3: Consolidate all provision funding and all student support funding within one public body (SFC)

This means:

- moving National Training Programmes (including provision for apprenticeships) funding and functions from SDS to SFC; and

- moving SAAS student support funding and functions to SFC.

Option 3 would see all provision funding and all student support funding delivered by a single body (i.e. SFC). As with Option 2, this is likely to require amendment of the Further and Higher Education (Scotland) Act 2005. National Training Programmes funding, systems, data and staff would be transferred from SDS to SFC. This would give the expanded SFC responsibility for all types of provision, as well as full responsibility for all FE and HE student support, with funding and potentially some staff moving from SAAS to support this change. SAAS would be dissolved as a separate entity, with its student support functions and resources merged into SFC. This would enable the ONS classification of universities to be maintained by ensuring that funding flows through an NDPB at arm's length from Ministers.

Research would remain with SFC. This would maintain the link between research and learning and teaching, which was highlighted as important during development of the Purpose and Principles.

3.4. Shortlist appraisal

3.4.1. Chosen approach to short-list appraisal

As the Strategic Case has highlighted, this project is ultimately about structural change, where the benefits relate to creating conditions to improve the system, and are therefore not easily quantifiable or monetisable. This means that cost-benefit analysis (CBA) is not suitable for appraisal.

In such circumstances, the alternative approach recommended by the Green Book is cost-effectiveness analysis (CEA). This approach requires an effectiveness measure, which in this appraisal was derived from a Multi-Criteria Decision Analysis (MCDA). The use of MCDA also provides Ministers with additional information on how outcomes vary by option and incorporates stakeholder views, as set out below.

An HMT Green Book approved method, MCDA assesses the performance of options against set criteria, taking into account the different viewpoints and perspectives of key stakeholders. It is used widely across the public sector in appraisals that do not rely predominantly on monetary valuations and facilitates wider engagement in the process. MCDA is a well-established technique that helps decision-makers make choices between alternative options when these are required to achieve multiple specific objectives. It is particularly effective when there is a mix of qualitative and quantitative criteria not directly comparable against each other and when the perspectives of multiple stakeholders may need to be considered, as is the case here. It is important to repeat that MCDA is strictly an analysis of non-monetisable impacts.

3.4.2. MCDA methodology

Criteria

The criteria for the MCDA workshop were developed with input from policy leads across the Scottish Government’s Lifelong Learning and Skills Directorate and with input from economists, analysts and other colleagues in Government with experience of applying MCDA approaches.

The criteria sets are closely aligned to the business needs identified in the Strategic Case, which flow from the Purpose and Principles. They were designed to test the deliverability of the options and the extent to which the options would meet the business needs.

The criteria against which these options were assessed are set out in Tables 8 to 12 below.

| Criteria | Description |
|---|---|
| 1. Simplify and clarify responsibility for governance and assurance of all aspects of the post-school system, allowing for action to be taken when standards are not met. | To what extent does the option deliver simple and clear responsibility for funding all forms of provision and all forms of student support (eliminating overlapping responsibility), providing Scottish Government and the Scottish Parliament with clear system-wide evidence of effective and efficient organisational performance and delivery? |

| Criteria | Description |
|---|---|
| 2. Improve operational efficiencies within the post-school funding landscape. | To what extent does the option deliver non-monetisable operational efficiencies, such as, but not limited to, enhanced knowledge sharing and a reduction in the number of back-office systems and services that have to be procured and maintained? |
| 3. Enable efficiencies within the funding recipient landscape. | To what extent does the option enable overheads to be reduced across the system? For example, is it likely to be easier and less resource-intensive for a college, university or training provider to navigate and satisfy reporting requirements? |

| Criteria | Description |
|---|---|
| 4. Enable best use of existing data. | To what extent does the option create transparency in data systems and improve access to existing data? |
| 5. Enable improvement of quality, comparability and fitness for purpose of future data collection. | To what extent does the option enable collection of consistent, comparable, timely data fit for purpose, to enable accurate reporting on system level outcomes, e.g. widening access, completion rates across all forms of provision, and an improved evidence base for policy making? |

| Criteria | Description |
|---|---|
| 6. Enable more targeted, equitable and sustainable distribution of student support funding. | To what extent does the option create the conditions that will enable different funding approaches to be considered, analysed and implemented, making student support more targeted so that it has maximum impact, is equitable and sustainable? |
| 7. Enable agile and responsive funding models across all forms of provision. | To what extent does the option create the conditions that will enable different funding models, analysis and approaches to achieving parity of esteem in provision, e.g. the opportunity to flex funding in-year in support of FE/Apprenticeships/HE/upskilling/reskilling provision? To what extent does the option enable a flexible and adaptable funding system, particularly in relation to its responsiveness to social and economic priorities? |

| Criteria | Description |
|---|---|
| 8. Deliverability of options. | How well can this option be delivered by the Scottish Government and existing public bodies within suitable timescales? How easily, quickly and efficiently can the option be delivered considering the scale of change? |
| 9. Deliverability of outcomes. | How well is the option likely to deliver on its desired purpose? |
| 10. Disruption. | Which option is least likely to cause disruption to delivery of funding across the system, including to staff in scope of transferring via TUPE/COSOP arrangements; changes to staff processes and responsibilities; transferring and changing data systems; and policy processes as a result of the proposed option? |

Ranking of options

An MCDA workshop with internal and external stakeholders was held on 5th September 2024 to rank the options against key criteria. Participants[38] included policy officials from the Scottish Government, participants from the three funding bodies (SDS, SFC and SAAS) as well as participants from colleges, universities and Independent Training Providers who are members of the Scottish Training Federation.

Participants were invited for their knowledge of, and expertise in, a range of different elements of the funding body landscape, from both a delivery and a customer perspective. They were asked to share their own views and experience, rather than their organisations’ positions, when discussing the options and criteria.

Participants were asked to consider each criterion in turn and to rank the three options (including Business as Usual) on their ability to meet it, relative to one another. An option ranked 1st was considered the best option for meeting that criterion, and an option ranked 3rd the worst. Options could also be ranked equally if the participant could not distinguish between two or more options for a specific criterion.

Box 1: SDS participation in the MCDA workshop

Participants from SDS had questions and reservations about the application of MCDA as an approach and the process to develop the criteria, and did not feel there had been adequate preparation or explanation of what would be required at the workshop to be able to fully participate.

SDS registered this view in writing the day before the session and during the workshop itself. While SDS participants did participate in the discussion, they did not feel able to make an informed judgement on the options based on the criteria. The following form of words was included in all responses and each option was ranked equally against each criterion.

“I find it difficult to rank the 3 options presented without a greater understanding of the complexity and strengths of the current delivery model, and the future target operating model for the change options.

The lack of robust data and evidence and discussion and information provided today does not allow me to complete this process at this stage in an informed manner.

On that basis I have scored all three options as rank 3 (worst) at this time.”

In line with best practice, these responses have been included in the analysis. Their inclusion/exclusion does not impact the overall results.

Weighting

Weighting is applied across criteria when ranking options to account for their relative importance. An equal weighting was applied in the main analysis to each of the five criteria sets and each criterion within them to allow these to be considered equally important.

Separate sensitivity analysis was undertaken to examine the extent to which the weighting affects the overall results. The results for this can be found in section 3.4.5.
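The main analysis applies equal weights at two levels. Read as nested equal weighting (one interpretation of the description above, not confirmed by the source), each of the five criteria sets receives one fifth of the total weight, which is then split equally among the criteria in that set, so a criterion in the three-criterion Implementation set counts for less than the single criterion in the first set. A minimal Python sketch of that reading, using exact fractions; the function name `nested_equal_weights` is hypothetical and the report's own calculation may differ:

```python
from fractions import Fraction

# Criteria numbers grouped into the five criteria sets, following Tables 8 to 12
# and the set names used in the summary results.
CRITERIA_SETS = {
    "Simplicity and Accountability": [1],
    "Operational Efficiency": [2, 3],
    "Data Quality, Availability and Comparability": [4, 5],
    "Equity and Agility": [6, 7],
    "Implementation": [8, 9, 10],
}


def nested_equal_weights(criteria_sets: dict[str, list[int]]) -> dict[int, Fraction]:
    """Give each set an equal share, then split each share equally among its criteria."""
    set_share = Fraction(1, len(criteria_sets))
    weights: dict[int, Fraction] = {}
    for criteria in criteria_sets.values():
        for criterion in criteria:
            weights[criterion] = set_share / len(criteria)
    return weights


weights = nested_equal_weights(CRITERIA_SETS)
for criterion, weight in sorted(weights.items()):
    print(criterion, weight)
```

A sensitivity run could replace the equal `set_share` with alternative shares per set; the method and results actually used are those reported in section 3.4.5.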

3.4.3. MCDA summary results

Table 13 below shows the summary results of the MCDA workshop for each option by criteria set.

| # | Criteria set | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|---|
| 1 | Simplicity and Accountability | 3 | 1 | 2 |
| 2 | Operational Efficiency | 3 | 1 | 1 |
| 3 | Data Quality, Availability and Comparability | 3 | 2 | 1 |
| 4 | Equity and Agility | 3 | 2 | 1 |
| 5 | Implementation | 1 | 1 | 3 |
| | Sum of ranks | 13 | 7 | 8 |
| | Overall result | 3 | 1 | 2 |
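Because a rank of 1 is best, the sum of ranks in Table 13 favours the option with the lowest total. The arithmetic can be reproduced with a short Python sketch; the five set-level ranks per option are copied from the table, and the helper name `rank_lowest_first` is hypothetical. The tie rule (tied totals share the better rank) is an assumption, although no tie arises in these totals.

```python
# Set-level ranks from Table 13, in the order: Simplicity and Accountability,
# Operational Efficiency, Data Quality, Equity and Agility, Implementation.
# 1 = best, 3 = worst; equal ranks were allowed.
SET_RANKS = {
    "Option 1. BAU": [3, 3, 3, 3, 1],
    "Option 2. Two funding bodies": [1, 1, 2, 2, 1],
    "Option 3. One funding body": [2, 1, 1, 1, 3],
}


def rank_lowest_first(scores: dict[str, int]) -> dict[str, int]:
    """Rank options so the lowest score is 1st; tied scores share the better rank."""
    return {
        option: 1 + sum(other < score for other in scores.values())
        for option, score in scores.items()
    }


sums = {option: sum(ranks) for option, ranks in SET_RANKS.items()}
overall = rank_lowest_first(sums)
for option in SET_RANKS:
    print(f"{option}: sum of ranks = {sums[option]}, overall result = {overall[option]}")
```

Summing ranks treats each criteria set as equally important, consistent with the equal weighting used in the main analysis; it also means a one-place difference counts the same whether it separates 1st from 2nd or 2nd from 3rd.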

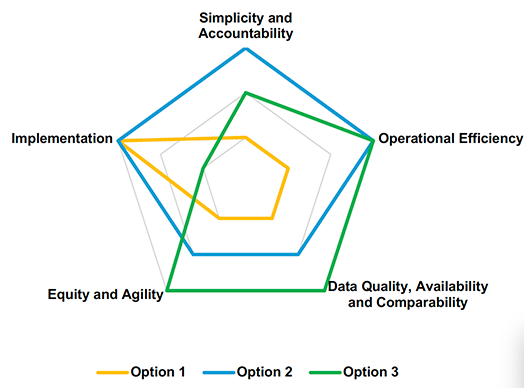

The radar diagram in Figure 2 provides a visual representation of how each option ranked against the different criteria sets. The closer to the edge, the higher the ranking. For example, Option 3 ranked 1st in “Equity and Agility”, whereas Option 1 ranked 3rd.

The overall workshop feedback highlighted clear agreement that the current system (i.e. Option 1 – Business as Usual) was not sustainable and that change is needed. However, feedback was also clear on the importance of implementing the change well, following best practice and retaining required skills while ensuring minimal disruption and keeping the learner, as well as employers, at the forefront.

In terms of which option best met the criteria, workshop feedback varied. Option 2 was seen as the most likely to remove complexity, and as easier and less disruptive to deliver than Option 3.

However, many participants argued that Option 3 would ultimately lead to marginally greater efficiencies and would enable better data collection and comparability as well as better equity outcomes.

The primary concern about Option 3 related to SAAS functions in administering student loans and whether the loan book could transfer to a non-departmental public body (NDPB). There was also concern that this Option would lead to more complexity, given that many of the bodies involved in student loans, including His Majesty’s Revenue and Customs (HMRC), HM Treasury and the Student Loans Company (SLC) are out of scope for this project.

While the overall scores show Option 2 ranking first, the more detailed results outlined below show how close the scores for Options 2 and 3 were. Indeed, many participants said there was, at most, a marginal difference in benefits between these two options.

3.4.4. MCDA detailed results

This section provides a more detailed description of the feedback received in the workshop on each criteria set.

| # | Criteria | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|---|
| 1 | Simplify and clarify responsibility for governance and assurance of all aspects of the post-school system, allowing for action to be taken when standards are not met. | 3 | 1 | 2 |

Workshop feedback highlighted that there is broad agreement that the current system (i.e. Option 1 – BAU) duplicates roles and responsibilities and is overly complex and confusing for stakeholders and learners.

A large proportion of the participants considered Option 3 to be the best option in theory to deliver simplicity and accountability across the post-school system and enable uniformity of standards. However, significant concern was expressed about how the loan book[39] and related activities could be managed in an NDPB if SAAS functions were to be moved to one.

Similarly, it was highlighted that there are multiple organisations outside of the scope of this Project involved in higher education (HE) student support and particularly in administering student loans, including Student Loans Company (SLC) and HMRC, and therefore some level of complexity would remain in any scenario.

This complexity around HE student support was ultimately the reason why Option 2 was ranked higher than Option 3. Option 2 was considered to deliver clear lines of accountability through each body having clear and separate remits – one on student support and the other on provision funding – helping organisations and individuals understand who is responsible for specific functions.

A smaller number of participants expressed some concern about the ability of Options 2 and 3 to retain SDS's valuable knowledge, skills and experience in delivering apprenticeships.

Some participants also noted that, regardless of the option chosen, including tuition fees in student support rather than provision adds complexity, as tuition fees are paid to institutions, much like teaching grants, while student support is paid directly to students. It was therefore suggested that separating the two should be considered.

| # | Criteria | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|---|
| 2 | Improve operational efficiencies within the post-school funding landscape. | 3 | 2 | 1 |
| 3 | Enable efficiencies within the funding recipient landscape. | 3 | 1 | 2 |

Looking at Criterion 2, the majority of participants agreed that if delivered well, Option 3 is the most likely to bring operational efficiencies longer term through economies of scale and reduction in back-office systems.

However, many also agreed that the difference between Options 2 and 3 in terms of operational efficiency is marginal and will depend on whether several different systems and services would still be needed after a merger. It was highlighted that efficiencies would be delivered over time and that achieving them would require high-quality change management, including of corporate culture, and significant lead-in time for the transformation.

For Criterion 3, there was broad agreement that the current system involved duplication in terms of reporting requirements as well as partnership working arrangements, particularly for colleges. However, there was no clear agreement amongst participants on whether Option 2 or 3 would meet the criterion best. Those who felt Option 3 best met the criterion highlighted the importance of having to establish relationships with only one organisation and the ease this would bring in terms of reporting requirements. At the moment, recipient bodies have to deal with multiple organisations, each with different rules, regulations, procedures, timelines, reporting requirements etc., which puts a strain on their limited resources.

Those who felt that Option 2 better met the criterion referred to the potential added complexity if SAAS functions were moved to an NDPB, as this could create new overheads in the administration and recovery of student loans. It was noted that, while efficiencies would be enabled, they would be limited by the number of different delivery methods, although further efficiencies could be unlocked through the wider reform programme.

| No. | Criteria | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|---|
| 4 | Enable best use of existing data. | 3 | 2 | 1 |
| 5 | Enable improvement of quality, comparability and fitness for purpose of future data collection. | 3 | 2 | 1 |

Participants generally highlighted the lack of data comparability and linkage as an issue in the current landscape, and therefore rated Option 1 as the worst-performing option in this criteria set.

Workshop feedback on both data criteria favoured Option 3, as it was seen as the option most likely to create the conditions for improvements in data sharing and consistency in new data collection. This would allow better linkage of data and therefore a better understanding of where funding is spent and the outcomes it brings, not only for Scottish Government spend but also from the perspective of universities, colleges and other providers. However, it was also raised during the workshop discussion that a clear plan for data improvements and collection would be required to deliver successful change.

However, some felt that the benefits of Option 3 over Option 2 were marginal, given that the systems are very different, and highlighted concerns about the feasibility of Option 3 in relation to data exchange with the Student Loans Company.

Other participants felt there were no specific issues in relation to either criterion or that structural change was not necessary to achieve these, although it was acknowledged that improvements across the system in relation to consistency would be beneficial.

It was noted that delivering Option 2 or 3 would affect the state of data in the short term and could potentially increase reporting requirements. There was a suggestion that Option 2 could be used as a stepping stone to Option 3: existing data and data streams would first be assessed across two funding bodies, with one funding body then established in the medium to long term, enabling streamlined collection of data that is fit for purpose.

| No. | Criteria | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|---|
| 6 | Enable more targeted, equitable and sustainable distribution of student support funding. | 3 | 2 | 1 |
| 7 | Enable agile and responsive funding models across all forms of provision. | 3 | 2 | 1 |

Workshop feedback highlighted broad agreement that the current system is not responsive enough and does not address issues with equity sufficiently.

Based on responses, Option 3 ranks higher than Option 2 for both criteria, although the difference is small. Respondents who favoured Option 3 highlighted how this option would enable a holistic approach to funding, allowing funding to be more targeted and agile, although many also noted that structural change on its own will not achieve this.

Those favouring Option 2 highlighted the differences between student support and provision funding, with two distinct bodies allowing each to have a more focussed mission. Some also felt that an Executive Agency allows for greater agility than an NDPB in relation to student support, due to its governance structures, and therefore creates better conditions for responsive policymaking.

A minority of respondents were concerned about the ‘one size fits all’ approach of Options 2 and 3, and felt that while, in theory, these options could achieve greater equity and agility, the risks associated in particular with the different delivery models for funding would make this too challenging.

| No. | Criteria | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|---|
| 8 | Deliverability of options. | 1 | 2 | 3 |
| 9 | Deliverability of outcomes. | 3 | 1 | 1 |
| 10 | Disruption. | 1 | 2 | 3 |

Feedback for this criteria set, while varied, focussed on similar themes. It was broadly agreed that Business as Usual would cause the least disruption and be the cheapest and easiest to deliver in the short term, as it requires no change, while Option 3 would carry the most risk and challenges and would be the most expensive to deliver.

However, the majority of feedback clearly expressed that, in the long term, change is required, as the current system is not sustainable and could cause disruption to providers in the future, meaning it would be unlikely to deliver the desired outcomes. It was also noted that disruption can be managed with sufficient lead-in times and high-quality change management.

There was no clear view, however, on which option would lead to better outcomes, with Options 2 and 3 tied in the rankings. Those favouring Option 3 felt that, while it would be the hardest to deliver and involve the most risk, it would ultimately be the most likely to deliver the outcomes if implemented well.

Those favouring Option 2 felt that it was a more realistic option for delivering outcomes, particularly in relation to removing complexity. Some responses suggested a phased approach may work best, with elements of Option 2 delivered now as a stepping stone to a longer-term aim of a single funding body.

3.4.5 MCDA sensitivity analysis

Sensitivity analysis examines how changes in different factors can impact the ranking of options and is therefore an integral part of undertaking a robust MCDA. This section outlines the three different types of sensitivity analysis that were undertaken to understand how robust the overall results are.

Monte Carlo sensitivity analysis on weightings

To test how sensitive the results are to different weightings, Monte Carlo analysis was undertaken. This involves simulating a large number of weighting combinations to determine the probability of the main result changing under different scenarios. Weighting parameters are selected using a random uniform distribution, constrained so that the weights sum to 1 and no Criteria Set receives less than 5%. As a result, each individual weight has a non-uniform distribution, typically falling between 5% and 35%. Each randomly selected combination of weightings is then applied in turn to determine which option ranks best under that combination.
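The weight-sampling step can be illustrated with a short Python sketch. It rests on assumptions not stated in the text: that “random uniform” means drawing uniformly over all weight combinations that sum to 1 (a flat Dirichlet, built here from normalised exponential draws) once the 5% floor has been reserved for each of the five Criteria Sets, and that each simulation ranks options by a weighted sum of the main-result Criteria Set ranks (the un-inverted form of Table 28). The names `sample_weights` and `winner_shares` are hypothetical, and the participant-exclusion layer is left out, so the shares returned are not expected to match the reported figures.

```python
import random

# Main-result Criteria Set ranks (1 = best, 3 = worst) for Options 1, 2, 3,
# recovered from the inverted scores in Table 28 (rank = 4 - inverted score).
SET_RANKS = [
    [3, 1, 2],  # Criteria Set 1: Simplicity and Accountability
    [3, 1, 1],  # Criteria Set 2: Operational Efficiency
    [3, 2, 1],  # Criteria Set 3: Data Quality, Availability and Comparability
    [3, 2, 1],  # Criteria Set 4: Equity and Agility
    [1, 1, 3],  # Criteria Set 5: Implementation
]

def sample_weights(rng, n_sets=5, floor=0.05):
    """Return n_sets weights that sum to 1, each at least `floor`.

    Assumption: the share left after the floors (0.75 for five sets) is split
    uniformly over the simplex (a flat Dirichlet), generated here from
    normalised exponential draws.
    """
    draws = [rng.expovariate(1.0) for _ in range(n_sets)]
    total = sum(draws)
    spare = 1.0 - n_sets * floor
    return [floor + spare * d / total for d in draws]

def winner_shares(n_sims=10_000, seed=1):
    """Share of simulations in which each option has the lowest weighted rank sum."""
    rng = random.Random(seed)
    n_options = len(SET_RANKS[0])
    wins = [0] * n_options
    for _ in range(n_sims):
        w = sample_weights(rng)
        scores = [sum(w[s] * SET_RANKS[s][o] for s in range(len(w)))
                  for o in range(n_options)]
        wins[scores.index(min(scores))] += 1  # lowest weighted rank sum wins
    return [count / n_sims for count in wins]

print(winner_shares())
```

Because Option 2 ranks at least as well as Option 1 on every Criteria Set in these ranks, Option 1 can never win in this simplified sketch. Under the flat-Dirichlet assumption, each weight equals 5% plus 75% of a Beta(1, 4) draw, which falls between 5% and 35% roughly 87% of the time, in line with the “typically” range described.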

An additional layer of sensitivity was also applied to the simulations through an exclusion of a small number of participants’ contributions in the ranking exercise. In practice, this meant that each simulation included rankings from 15 out of the 19 participants. The sample of 15 participants was randomly selected for each simulation – all participants are treated equally in this sensitivity analysis.
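The participant-exclusion layer can be sketched as drawing a fresh subset of participants, without replacement, in every simulation. This assumes each participant's ranks for one criterion are stored as a list with one entry per option; that data layout and the function name `subsample_rank_sums` are hypothetical, and the participants' actual rankings are not reproduced here.

```python
import random

def subsample_rank_sums(participant_ranks, k, rng):
    """Sum option ranks over a random subset of k participants.

    participant_ranks: one list per participant giving that participant's rank
    (1 = best) for each option on a single criterion. A new subset is drawn
    without replacement on every call, so all participants are treated equally.
    """
    chosen = rng.sample(range(len(participant_ranks)), k)
    n_options = len(participant_ranks[0])
    return [sum(participant_ranks[p][o] for p in chosen) for o in range(n_options)]
```

In the reported analysis the equivalent call would use `k=15` with 19 participants; each simulation would then, presumably, pass these sums through the same ranking steps before applying the sampled weightings.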

The Monte Carlo analysis shows that Option 2 is preferred in 62% and Option 3 in 38% of the 10,000 simulations undertaken, with Option 1 winning only on rare occasions (in less than 1% of the simulations).

More detailed interpretation of the results reveals that Option 3 is generally preferred when the combined weight of Criteria Sets 3 (Data) and 4 (Equity and Agility) is greater than the combined weight of Criteria Sets 1 (Simplicity and Accountability) and 5 (Implementation). For Option 2, the opposite is true.

If the additional layer of sensitivity around participant exclusion is removed – i.e. when the model only considers sensitivity to different weightings – Option 2 is preferred 78% of the time.

Sensitivity analysis by organisational groupings

While the participants were invited to take part in the MCDA workshop based on their individual expertise rather than as representatives of their respective organisations, separate sensitivity analysis was also conducted to identify and account for any potential organisational or sectoral level bias.

This was done by splitting the workshop results into different organisational groupings to see if the overall results differed depending on the organisational make-up. Four groupings were selected. These were ‘Funding bodies only’, ‘Funding bodies and Scottish Government’, ‘Other organisations only’, and ‘all organisations apart from Scottish Government’.

The findings show that the overall result is maintained in each of the four tests, with Option 2 the preferred option, followed by Option 3. However, in two of the tests – ‘funding bodies only’ and ‘all organisations apart from Scottish Government’ – Option 2 wins over Option 3 by a stronger margin (2 points instead of 1), suggesting that participants from Scottish Government show a slightly stronger preference for Option 3 than participants from other organisational groupings.

Sensitivity analysis of MCDA ranking assessment method

Finally, separate sensitivity tests were done to understand how changing the method used to assess participant rankings affects the overall results. An example of how the main results outlined in Section 3.4.3 were arrived at is shown below:

Step 1 – For each option, participants’ ranks were summed for each criterion. For example, the sums of participants’ rankings by option for Criteria Set 2 (Operational Efficiency) are presented in Table 19 below.

| No. | Criteria set 2 – operational efficiency | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|---|
| 2 | Improve operational efficiencies within the post-school funding landscape. | 46 | 30 | 23 |
| 3 | Enable efficiencies within the funding recipient landscape. | 44 | 29 | 31 |

Step 2 – These totals were then ranked as set out in Table 20 below, with the highest sum receiving a rank of 3 (worst) and the lowest sum a rank of 1 (best). For criterion 2, Option 1 ranks third while Option 3 ranks first and is therefore preferred. For criterion 3, Option 1 remains third while Option 2 ranks first.

| No. | Criteria set 2 – operational efficiency | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|---|
| 2 | Improve operational efficiencies within the post-school funding landscape. | 3 | 2 | 1 |
| 3 | Enable efficiencies within the funding recipient landscape. | 3 | 1 | 2 |

Step 3 – The criterion-level ranks in Table 20 were then summed within Criteria Set 2, with all criteria within a Criteria Set weighted equally, and ranked again from 1 (best) to 3 (worst) using the same method, as set out in Table 21 below. For Criteria Set 2, the sum of ranks for both Options 2 and 3 equals 3, so these options rank equally.

| Criteria set 2 – operational efficiency | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|
| Sum of criterion ranks | 6 | 3 | 3 |
| Criteria set rank | 3 | 1 | 1 |

Step 4 – The criteria set ranks were then added up, with each Criteria Set having an equal weighting, and ranked as set out in the main results (Section 3.4.3, Table 13), with Option 2 the preferred option, above Option 3 and with Option 1 ranking last.
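Steps 1 to 4 can be checked with a short Python sketch using the figures in Tables 19 to 21. It assumes tied totals share the better rank and the next rank is skipped (standard competition ranking), which matches Table 21, where Options 2 and 3 both rank 1 and Option 1 ranks 3. The helper name `rank_lowest_best` is hypothetical, and the five main-result Criteria Set ranks used in Step 4 are recovered from the inverted scores in Table 28 (rank = 4 - score).

```python
def rank_lowest_best(values):
    """Rank values so the lowest gets 1; ties share the better rank (1, 1, 3)."""
    return [1 + sum(other < v for other in values) for v in values]

# Step 1 output: summed participant ranks for Options 1, 2, 3 (Table 19).
criterion_sums = {
    2: [46, 30, 23],  # Improve operational efficiencies within the landscape
    3: [44, 29, 31],  # Enable efficiencies within the funding recipient landscape
}

# Step 2: rank the sums within each criterion (Table 20).
criterion_ranks = {c: rank_lowest_best(s) for c, s in criterion_sums.items()}

# Step 3: sum the criterion ranks (equal weights) and rank again (Table 21).
set_rank_sums = [sum(r[o] for r in criterion_ranks.values()) for o in range(3)]
set_ranks = rank_lowest_best(set_rank_sums)

# Step 4: sum the five Criteria Set ranks (equal weights) and rank overall.
main_set_ranks = [[3, 1, 2], [3, 1, 1], [3, 2, 1], [3, 2, 1], [1, 1, 3]]
overall_sums = [sum(s[o] for s in main_set_ranks) for o in range(3)]
overall_ranks = rank_lowest_best(overall_sums)

print(criterion_ranks, set_ranks, overall_sums, overall_ranks)
```

The overall sums of 13, 7 and 8 place Option 2 first, Option 3 second and Option 1 last, as in the main results.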

In the sensitivity test, an alternative approach to assessing the main results was used. This approach summed participant rankings at the Criteria Set level rather than at the individual criterion level, as set out in Table 22 below. It therefore skips Step 2 of the method above: the scores in Table 19 are summed across the Criteria Set and the sums are ranked, with the highest sum ranking third (worst) and the lowest sum ranking first (best).

| Criteria set 2 – operational efficiency | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|
| Sum of participant ranks | 90 | 59 | 54 |
| Criteria set rank | 3 | 2 | 1 |

Table 23 below sets out how the Criteria Set level ranks and the overall result change when the method of assessment is changed. It shows that the results are highly sensitive to the chosen method: under the alternative method, Option 3 ranks best, followed by Option 2. However, while the positions of these two options are switched, the difference between them remains marginal.

| No. | Criteria Set | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|---|
| 1 | Simplicity and Accountability | 3 | 1 | 2 |
| 2 | Operational Efficiency | 3 | 2 | 1 |
| 3 | Data Quality, Availability and Comparability | 3 | 2 | 1 |
| 4 | Equity and Agility | 3 | 2 | 1 |
| 5 | Implementation | 1 | 2 | 3 |
| | Sum of ranks | 13 | 9 | 8 |
| | Overall result | 3 | 2 | 1 |
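The alternative method differs only in where the first ranking happens. The sketch below, which uses a hypothetical `rank_lowest_best` helper with ties sharing the better rank, reproduces Table 22 from the sums in Table 19 and the overall result in Table 23 from its Criteria Set ranks. It also shows why the two methods can disagree: ranking within each criterion discards the size of the gaps (Option 3's 7-point lead on criterion 2 counts the same as Option 2's 2-point lead on criterion 3), whereas summing first preserves them.

```python
def rank_lowest_best(values):
    """Rank values so the lowest gets 1; ties share the better rank."""
    return [1 + sum(other < v for other in values) for v in values]

# Participant rank sums for Criteria Set 2 by criterion (Table 19), Options 1-3.
table_19 = [[46, 30, 23], [44, 29, 31]]

# Alternative method: add the raw sums across the Criteria Set first (Table 22) ...
set_totals = [sum(row[o] for row in table_19) for o in range(3)]
set_rank = rank_lowest_best(set_totals)

# ... then sum the five Criteria Set ranks from Table 23 and rank overall.
alt_set_ranks = [[3, 1, 2], [3, 2, 1], [3, 2, 1], [3, 2, 1], [1, 2, 3]]
alt_sums = [sum(s[o] for s in alt_set_ranks) for o in range(3)]
alt_overall = rank_lowest_best(alt_sums)

print(set_totals, set_rank, alt_sums, alt_overall)
```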

3.4.6 Economic appraisal of costs

This section outlines the results of the economic appraisal of the costs for each option.

Method

The economic appraisal conducted follows HMT’s Green Book methodology and principles, with annual nominal costs summed and discounted (using the Green Book Social Time Preference Rate of 3.5%) to give a Present Value of Costs (PVC). These costs were derived from the Financial Case and therefore adopt all the same assumptions.
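The discounting step can be sketched in a few lines of Python. This is a minimal sketch assuming the Green Book convention that the first appraisal year (2025/26) is year 0 and undiscounted, with one real-terms cost per financial year; the function name `present_value` is hypothetical, and the flat £1 million a year stream is an illustrative input, not a figure from the Financial Case.

```python
STPR = 0.035  # Green Book Social Time Preference Rate

def present_value(costs, rate=STPR):
    """Discount annual real-terms costs; costs[0] is year 0 and is not discounted."""
    return sum(c / (1 + rate) ** t for t, c in enumerate(costs))

# Hypothetical example: £1 million a year over the 9-year horizon (2025/26 to 2033/34).
pv_flat = present_value([1.0] * 9)
print(round(pv_flat, 3))
```

For this flat stream the PVC is about £7.87 million, so a cost spread evenly across the horizon is worth roughly 87% of its undiscounted total.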

A 9-year time horizon[40] was used from the point at which the first transition-related spending is expected to be incurred for Options 2 and 3 (FY 2025/26). Costs include those associated with both the transition to and the running of each option and, in accordance with standard methodology, are presented in real terms (2025/26 prices).

As set out in Section 3.4.1, this project is primarily about enabling change in the future. Therefore, no comprehensive attempt has been made to estimate the monetary value of benefits for each of the options. The MCDA results above provide some indication of non-monetisable advantages and disadvantages of the options.

The financial modelling, which is set out in more detail in the Financial Case, also does not include any efficiencies in the costings for each option over the appraisal period. This is because it is unclear at this stage where in the system efficiencies could be realised, or when they might materialise. They are likely to be identified once detailed design work begins on a new target operating model across all public bodies, and will likely relate to economies of scale through shared systems and services.

It is also likely that the efficiencies and benefits of this reform would deliver savings over a longer period than the 9-year time horizon used in this analysis. Further modelling of this will be undertaken during the detailed design phase on the basis of an agreed delivery option.

Results

The costs are broken down into three categories: staff related costs, non-staff related costs and transitional costs. The first two categories capture the costs of running the post-school education funding body landscape. Transitional costs cover the costs of moving from Business as Usual to Options 2 and 3. Due to considerable uncertainty in the cost estimates, both high and low estimates are presented. More detail on how these costs were arrived at can be found in the Financial Case. The total PVC for each option by cost category is shown in Table 24 below; components may not sum exactly to the totals due to rounding.

| Present Value of Costs (£ million) | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|
| Staff related costs | | | |
| Low | 274.1 | 276.0 | 278.1 |
| High | 300.4 | 302.3 | 304.4 |
| Non-staff related costs | | | |
| Low | 85.7 | 86.4 | 87.3 |
| High | 94.7 | 97.3 | 99.8 |
| Transitional costs | | | |
| Low | 0.0 | 2.5 | 3.9 |
| High | 0.0 | 4.5 | 6.9 |
| Total | | | |
| Low | 359.7 | 364.9 | 369.2 |
| High | 395.0 | 404.1 | 411.1 |

As a considerable proportion of the costs for Options 2 and 3 would also be incurred under Option 1, it is helpful to look solely at the additional costs expected to be incurred by Options 2 and 3 relative to Option 1. These are set out in Table 25 below.

| Additional Present Value of Costs relative to Option 1 (£ million) | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|
| Staff costs | | | |
| Low | 0.0 | 1.9 | 4.0 |
| High | 0.0 | 1.9 | 4.0 |
| Non-staff costs | | | |
| Low | 0.0 | 0.8 | 1.6 |
| High | 0.0 | 2.6 | 5.1 |
| Transitional costs | | | |
| Low | 0.0 | 2.5 | 3.9 |
| High | 0.0 | 4.5 | 6.9 |
| Total | | | |
| Low | 0.0 | 5.2 | 9.5 |
| High | 0.0 | 9.1 | 16.1 |

In PVC terms, Option 2 is estimated to incur additional costs relative to Option 1 in the region of £5.2 million to £9.1 million over the 9-year appraisal period. For Option 3, this additional cost is between £9.5 million and £16.1 million. The difference between Options 2 and 3 is primarily driven by the higher number of staff and systems being transferred in Option 3.
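The additional-cost totals in Table 25 are simple differences between the totals in Table 24, pairing each option's low estimate with Option 1's low estimate and high with high. A minimal sketch (the function name `additional_vs_bau` is hypothetical) reproduces the totals row; individual component rows can differ by £0.1 million because the published figures are rounded.

```python
# Total PVC (£ million) from Table 24, as (low, high) for each option.
total_pvc = {
    "Option 1": (359.7, 395.0),
    "Option 2": (364.9, 404.1),
    "Option 3": (369.2, 411.1),
}

def additional_vs_bau(pvc, baseline="Option 1"):
    """Additional PVC relative to the baseline, matching low with low and high with high."""
    base_low, base_high = pvc[baseline]
    return {
        option: (round(low - base_low, 1), round(high - base_high, 1))
        for option, (low, high) in pvc.items()
    }

extra = additional_vs_bau(total_pvc)
print(extra)
```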

3.4.7 Sensitivity analysis of cost appraisal

To further account for uncertainty in the cost estimates, particularly in relation to transition costs as well as running costs for Options 2 and 3 in the later years of the appraisal period, sensitivity analysis was undertaken to understand the impact of changing specific values on the Present Value of Costs. Three scenarios were considered. These are set out below.

Scenario 1 – assume 10% increase to year-on-year staff costs for those in scope of transferring to a new organisation from 2026/27

There is some level of uncertainty over staff costs for both Options 2 and 3 in relation to both assumed staff numbers for future years as well as how pay harmonisation will impact costs. Therefore, a 10% increase in the “high” estimate of year-on-year staff costs is applied to those in scope to transfer to a new organisation from 2026/27 onwards to see how this impacts total PVC for both options.

Scenario 2 – assume 100% increase to transition costs over 2025/26 to 2026/27

As set out in the Financial Case, transition costs for each option are based on costs from similar structural reform projects, as they cannot currently be estimated with a high level of certainty for this specific project. Similarly, no assumptions have been made about any data or IT system transformation programmes that may result from this change. It is therefore likely that transition costs are underestimated, potentially significantly. A 100% increase to the “high” estimate of transition costs is applied to illustrate the impact of higher transition costs on the PVC.

Scenario 3 – combine scenarios 1 and 2

This scenario combines the two scenarios above to understand how the PVC changes if both were to occur.

Sensitivity analysis results

Table 26 sets out the change in the range of total additional PVC for Option 2 relative to Option 1, in each of the three scenarios, using the same HMT Green Book methodology as set out in the sections above.

| Option 2: total additional Present Value of Costs relative to Option 1 (£ million) | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|
| Low | 5.2 | 5.2 | 5.2 |
| High | 14.9 | 13.6 | 19.5 |

Increasing the staff and/or transitional costs in Option 2 increases the high estimate of total additional Present Value of Costs relative to Option 1 from £9.1 million to between £13.6 million and £19.5 million depending on the scenario.

Table 27 sets out the change in the range of total additional PVC for Option 3 relative to Option 1, in each of the three scenarios, using the same HMT Green Book methodology as set out in the sections above.

| Option 3: total additional Present Value of Costs relative to Option 1 (£ million) | Scenario 1 | Scenario 2 | Scenario 3 |
|---|---|---|---|
| Low | 9.5 | 9.5 | 9.5 |
| High | 30.8 | 23.0 | 37.7 |

Increasing the staff and/or transitional costs in Option 3 increases the high estimate of total additional Present Value of Costs relative to Option 1 from £16.1 million to between £23.0 million and £37.7 million depending on the scenario.
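Because present values add across cost lines, Scenario 2 amounts to adding the high transitional-cost estimate from Table 25 a second time, and Scenario 3 to adding it to the Scenario 1 result. The sketch below checks this against Tables 26 and 27, assuming the Scenario 1 staff-cost uplift and the Scenario 2 transition uplift affect separate cost lines; the variable and function names are hypothetical.

```python
# High estimates of total additional PVC relative to Option 1 (£ million).
BASE_HIGH = {"Option 2": 9.1, "Option 3": 16.1}         # Table 25, total (high)
TRANSITION_HIGH = {"Option 2": 4.5, "Option 3": 6.9}    # Table 25, transitional (high)
SCENARIO_1_HIGH = {"Option 2": 14.9, "Option 3": 30.8}  # Tables 26 and 27

def scenario_2(option):
    """Doubling the high transition estimate adds it to the PVC a second time."""
    return BASE_HIGH[option] + TRANSITION_HIGH[option]

def scenario_3(option):
    """Scenario 1 result plus the extra transition costs from Scenario 2.

    Assumes the staff-cost uplift and the transition uplift touch separate cost
    lines, so their present values simply add.
    """
    return SCENARIO_1_HIGH[option] + TRANSITION_HIGH[option]

for option in BASE_HIGH:
    print(option, round(scenario_2(option), 1), round(scenario_3(option), 1))
```

This gives £13.6 million and £23.0 million for Scenario 2, matching the tables, and £19.4 million and £37.7 million for Scenario 3; the £0.1 million gap against the reported £19.5 million for Option 2 is consistent with rounding in the published figures.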

3.4.8 Cost-effectiveness analysis

This section combines the MCDA and cost analysis to provide a formal cost-effectiveness analysis (CEA), the alternative method proposed in the HMT Green Book if Cost-Benefit Analysis is not possible.

Method

Cost-effectiveness analysis is a form of economic analysis that compares the relative costs and outcomes of different options with a specific “effectiveness measure” representing the outcomes. Here, the overall scores of the MCDA form the effectiveness measure (or outcome). This allows us to conduct CEA to compare the results from the MCDA and cost appraisal more comprehensively in line with HMT Green Book Guidance.

Cost-effectiveness analysis presents a ratio of costs to effectiveness. In this case, the total PVC figures shown in Table 24 in Section 3.4.6 are divided by the MCDA score (i.e. the sum of inverted ranks) for each option to arrive at the cost-effectiveness ratio, with the lowest ratio being the most “cost-effective”.

In order to create the cost-effectiveness ratio, the MCDA results need to be inverted so that a higher score means better performance: an option ranked 1st (best) on a Criteria Set receives a score of 3, and an option ranked 3rd (worst) receives a score of 1. Without this step, the best-performing option would have the smallest denominator and therefore, misleadingly, the highest ratio. Inversion has no impact on the main results set out in Table 13 in Section 3.4.3, with Option 2 still ranking best and Option 1 worst.
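The inversion can be expressed as a one-line mapping. This minimal sketch assumes three options, so each inverted score is 4 minus the rank; the main-result Criteria Set ranks are recovered from Table 28, and the name `invert` is hypothetical.

```python
# Main-result Criteria Set ranks (1 = best) for Options 1, 2, 3,
# i.e. the un-inverted form of Table 28.
set_ranks = [
    [3, 1, 2],  # 1 Simplicity and Accountability
    [3, 1, 1],  # 2 Operational Efficiency
    [3, 2, 1],  # 3 Data Quality, Availability and Comparability
    [3, 2, 1],  # 4 Equity and Agility
    [1, 1, 3],  # 5 Implementation
]

def invert(rank, n_options=3):
    """Map rank 1 (best) to 3 and rank 3 (worst) to 1, so higher scores are better."""
    return n_options + 1 - rank

inverted = [[invert(r) for r in row] for row in set_ranks]
mcda_scores = [sum(row[o] for row in inverted) for o in range(3)]
print(mcda_scores)
```

Because each inverted score is 4 minus the rank, each inverted sum equals 20 minus the original sum of ranks (13, 7 and 8 become 7, 13 and 12), which is why inversion leaves the ordering of options unchanged.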

Table 28 below shows the inverted MCDA results.

| No. | Criteria Set | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|---|
| 1 | Simplicity and Accountability | 1 | 3 | 2 |
| 2 | Operational Efficiency | 1 | 3 | 3 |
| 3 | Data Quality, Availability and Comparability | 1 | 2 | 3 |
| 4 | Equity and Agility | 1 | 2 | 3 |
| 5 | Implementation | 3 | 3 | 1 |
| | Sum of ranks | 7 | 13 | 12 |
| | Overall result | 1 | 3 | 2 |

Results

Table 29 shows the PVC range, MCDA score as well as the cost-effectiveness ratio for each of the options.

| Cost-effectiveness analysis results | Option 1. BAU | Option 2. Two funding bodies | Option 3. One funding body |
|---|---|---|---|
| PVC range (low-high) (£ million) | 359.7 – 395.0 | 364.9 – 404.1 | 369.2 – 411.1 |
| Sum of ranks | 7 | 13 | 12 |
| Cost-effectiveness ratio (low-high) (£ million per MCDA point) | 51.4 – 56.4 | 28.1 – 31.1 | 30.8 – 34.3 |

The results align with the findings of the MCDA analysis, showing that Option 2 has the lowest ratio, therefore suggesting it is the most “cost-effective” option. This is followed closely by Option 3, with Option 1 having the highest ratio by a considerable margin, therefore being the least “cost-effective” option. The cost-effectiveness ratio ranges for Options 2 and 3 are partially overlapping, demonstrating how close the two options are, consistent with the MCDA and sensitivity analysis findings.
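The ratios in Table 29 follow directly from dividing each end of the PVC range by the option's inverted MCDA score. A minimal sketch (the function name `ce_ratio` is hypothetical) reproduces them, along with the partial overlap between Options 2 and 3.

```python
# PVC range (£ million) from Table 29 and inverted MCDA scores (sum of ranks).
pvc = {"Option 1": (359.7, 395.0), "Option 2": (364.9, 404.1), "Option 3": (369.2, 411.1)}
score = {"Option 1": 7, "Option 2": 13, "Option 3": 12}

def ce_ratio(option):
    """Cost per MCDA point (£ million) for the low and high PVC; lower is better."""
    low, high = pvc[option]
    return round(low / score[option], 1), round(high / score[option], 1)

ratios = {option: ce_ratio(option) for option in pvc}
print(ratios)
```

Option 3's low ratio (30.8) sits below Option 2's high ratio (31.1), which is the overlap referred to above.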

Sensitivity analysis

Given the closeness of the results for Options 2 and 3, several sensitivity tests were conducted to see how sensitive the CEA results are to changing factors such as weightings and costs. This was mainly done in the form of simple “tipping point” analysis, i.e. how much the weightings or costs would need to change for the result to change.

Looking first at changing the weightings, the results are broadly consistent with the Monte Carlo analysis set out in section 3.4.5, in that the CEA results are highly sensitive to different MCDA Criteria Set weightings and that there are very few, if any, weightings scenarios where Option 1 becomes most “cost-effective”.

For example, increasing the weighting of Criteria Set 5 (Implementation) to 80%, leaving a 5% weighting for each of the other Criteria Sets, would still lead to Option 2 marginally ranking first over Option 1, and therefore being the most “cost-effective” option.

However, the more weight given to Criteria Set 5, the less cost-effective Option 3 becomes, with it typically ranking last in scenarios where Criteria Set 5 is assigned a weighting of more than 45%, with Option 1 ranking second behind Option 2.

Finally, the likelihood of Option 3 becoming the most “cost-effective” option over Option 2 typically increases the higher the weightings assigned to Criteria Sets 3 (Data) and 4 (Equity and agility) are relative to those for Criteria Sets 1 (Simplicity and accountability) and 5 (Implementation).
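The weighting tipping points can be explored with a short sketch. It assumes the weighted MCDA score is the weighted sum of the inverted Criteria Set scores in Table 28, and that any weight not given to Criteria Set 5 is split equally across the other four sets, as in the 80% example; these assumptions and all names are illustrative. Under them, using the low PVC estimates, Option 3 first has the highest (least cost-effective) ratio at a Criteria Set 5 weight of about 45%, and at 80% Option 2 stays ahead of Option 1.

```python
# Inverted Criteria Set scores (3 = best) for Options 1, 2, 3, from Table 28.
INVERTED = [
    [1, 3, 2],  # 1 Simplicity and Accountability
    [1, 3, 3],  # 2 Operational Efficiency
    [1, 2, 3],  # 3 Data Quality, Availability and Comparability
    [1, 2, 3],  # 4 Equity and Agility
    [3, 3, 1],  # 5 Implementation
]
PVC_LOW = [359.7, 364.9, 369.2]  # Table 24, total PVC (low), £ million

def weights_with_set5(w5, n_sets=5):
    """Give Criteria Set 5 weight w5 and split the rest equally (an assumption)."""
    other = (1.0 - w5) / (n_sets - 1)
    return [other] * (n_sets - 1) + [w5]

def ce_ratios(weights, pvc=PVC_LOW):
    """PVC divided by the weighted inverted MCDA score, for each option."""
    scores = [sum(w * row[o] for w, row in zip(weights, INVERTED)) for o in range(3)]
    return [cost / s for cost, s in zip(pvc, scores)]

def option3_last_threshold(grid=1000):
    """Smallest Criteria Set 5 weight on the grid at which Option 3 ranks last."""
    for i in range(grid + 1):
        w5 = i / grid
        r = ce_ratios(weights_with_set5(w5))
        if r[2] == max(r):
            return w5
    return None

print(option3_last_threshold(), ce_ratios(weights_with_set5(0.8)))
```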

Turning to changes in the Present Value of Costs, the analysis suggests that, for Option 1 to become the most “cost-effective” option, the overall PVC would have to increase by more than 80% for Option 2 and by more than 65% for Option 3. Even if the weighting assigned to Criteria Set 5 were set at 80%, Option 2 costs would still need to increase by a minimum of around 10% for Option 1 to become the most “cost-effective”.
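The cost tipping points follow from setting an option's ratio equal to Option 1's. A minimal sketch, assuming the inverted sums in Table 28 for equal weights and, for the 80% case, weighted inverted scores with Criteria Set 5 at 80% and 5% elsewhere (2.6, 2.9 and 1.35 for Options 1 to 3); the name `breakeven_increase` is hypothetical. Across the low and high PVC estimates it gives increases of about 82% to 83% for Option 2 and about 65% to 67% for Option 3 under equal weights, and about 9% to 10% for Option 2 when Criteria Set 5 is weighted at 80%.

```python
# Total PVC (£ million) as (low, high) estimates, from Table 24.
PVC = {
    "Option 1": (359.7, 395.0),
    "Option 2": (364.9, 404.1),
    "Option 3": (369.2, 411.1),
}

def breakeven_increase(option, scores, baseline="Option 1"):
    """Percentage PVC rise at which `option` is exactly as cost-effective as the
    baseline, for the low and high estimates.

    A ratio is PVC / score, so the break-even PVC is the baseline ratio
    multiplied by the option's score.
    """
    result = []
    for end in (0, 1):  # 0 = low estimate, 1 = high estimate
        baseline_ratio = PVC[baseline][end] / scores[baseline]
        breakeven_pvc = baseline_ratio * scores[option]
        result.append(100 * (breakeven_pvc / PVC[option][end] - 1))
    return tuple(result)

# Equal weights: inverted sums of ranks from Table 28.
EQUAL = {"Option 1": 7, "Option 2": 13, "Option 3": 12}
# Criteria Set 5 at 80%, others at 5%: weighted inverted scores (assumption).
SET5_80 = {"Option 1": 2.6, "Option 2": 2.9, "Option 3": 1.35}

for label, scores, option in [("equal", EQUAL, "Option 2"),
                              ("equal", EQUAL, "Option 3"),
                              ("Set 5 at 80%", SET5_80, "Option 2")]:
    low, high = breakeven_increase(option, scores)
    print(f"{label}: {option} needs +{high:.0f}% to +{low:.0f}%")
```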

These CEA sensitivity results are consistent with the main MCDA results and with the Monte Carlo analysis, which also removes a randomly selected set of participants from each simulation to account for bias in groupings of individuals. This is because the differences in PVC between the options are much smaller in relative terms than the differences in their MCDA scores: the total PVC of Option 3, the most expensive option, is only around 3% to 4% higher than that of Option 1, whereas the inverted sums of ranks range from 7 to 13. As a result, the CEA results are more sensitive to changes in the MCDA results than to changes in costs.

3.5 Conclusion

The MCDA results show clear agreement among stakeholders that Business as Usual (Option 1 – BAU) is not sustainable and that there is a clear case for change, with this option ranking last by a considerable margin. Option 2 ranks first, followed closely by Option 3, with sensitivity analysis suggesting that minor changes in factors such as weightings or the method of assessment can change which of the two options ranks first.

Further investigation of the results highlights some key differences between the performance of Options 2 and 3 across the different criteria sets. As the Monte Carlo analysis suggests, Option 3 generally performs better in relation to improvements in data collection and equity outcomes as well as general responsiveness of the system. However, some of the MCDA responses also highlight a significant risk for Option 3 in relation to the management of the Loan Book and the associated stakeholder relationships, with the potential for further added complexity, as well as disruption in trying to address these complexities.

The results suggest that Option 2 would be more straightforward to implement, without adding new complexities or further disruption to key functions. It was also noted that, while Option 2 would still deliver benefits relating to data, equity and agility, these may be marginally greater in Option 3, due to all functions being within a single body.

The cost appraisal shows clearer differences between the three options, with Option 1 having the lowest present value of costs, followed by Option 2, with Option 3 having the highest costs. However, cost-effectiveness analysis, which combines the results of the MCDA with the cost analysis, reveals that despite Option 1 having the lowest costs, it is still the least “cost-effective”. This analysis also further demonstrates there are only marginal differences between Options 2 and 3.

Given that the analysis points to no obvious “winner”, the final decision may rest on risk appetite, in terms of both the tolerable levels of disruption and additional spend, balanced against the importance placed on specific outcomes. When all outcomes are assigned equal importance, the cost-effectiveness analysis shows Option 2 as the most “cost-effective” option by a small margin.

In particular, consideration should be given to the extent and nature of the potential disruption associated with Option 3, and whether it could lead to issues with student loan provision that could negatively impact students. Similarly, it is useful to consider whether the potential for marginally enhanced delivery of some outcomes from Option 3 should be given more weight, and therefore be sufficient to outweigh the additional associated costs and the complexity of implementing such a large-scale change.

Contact

Email: postschoolreform@gov.scot
