Health - redesign of urgent care: evaluation - technical report

Technical report to support the Redesign of Urgent Care (RUC) Evaluation Main report.

3 Methodology

The findings set out in the Redesign of Urgent Care (RUC) Evaluation Main report, and in the ‘Full findings’ sections of this Technical report, were derived from four key data collection and analysis processes, namely:

- A survey of people who had tried to access the RUC pathway by calling NHS 24, but ended the call before speaking to anyone (Discontinued Caller survey)

- A survey of patients who had accessed the RUC pathway via NHS 24 (Patient survey)

- Online focus groups with NHS staff working across the urgent care pathway

- Interrupted Time Series Analysis incorporating available Urgent Care delivery metrics

For these pieces of work, an ethics review and a Data Protection Impact Assessment were undertaken and monitored throughout the evaluation process by the Scottish Government. For the Patient survey and the Interrupted Time Series Analysis, additional approvals were sought. For the Patient survey, an application to the NHS Health and Social Care Public Benefit and Privacy Panel (PBPP) was submitted and approved in order for a sample of eligible patients who had contacted NHS 24 111 to be compiled. For the Interrupted Time Series Analysis to be conducted, access to secondary data was required. A data sharing request was submitted to and granted by Public Health Scotland.

A Research Advisory Group (RAG) was also established to support the evaluation. Via meetings and correspondence, the RAG provided advice and quality assurance on matters including:

- Project inception, overview of the work and methodology

- Review and feedback on interim findings from the Discontinued Caller survey, focus groups with staff and initial findings from the Interrupted Time Series Analysis

- Review and feedback on provisional findings from the Patient survey and further findings from the Interrupted Time Series Analysis

- The final Main, Summary and Technical reports

3.1 Research questions

The research questions for each of these four strands are set out below:

3.1.1 Discontinued Caller Survey

- What are patients’ experiences of NHS 24 call waiting times before discontinuing their call?

- Why do patients decide to end the call before it is answered?

- What actions did patients take after they took the decision to end the call?

- If no action was taken, what was the reason for this and how did it impact their health?

- What is patients’ overall experience of the RUC pathway?

3.1.2 Patient Survey

- What are patients’ experiences of the RUC pathway?

- What went well and what could be developed further?

- How do patients rate their overall experience of the RUC pathway?

- How do patients’ experiences of RUC vary, if at all, between different demographic groups?

- Do patients’ experiences of the RUC pathway differ depending on the number of ‘stop points’ (i.e., service types accessed) in their pathway?

- How do patients’ experiences of RUC vary, if at all, depending on NHS 24 outcome/disposition?

3.1.3 Focus groups with NHS staff

- What do staff understand the purpose of the RUC pathway to be?

- How have the changes to unscheduled care affected practice and workforce experiences?

- What are staff perspectives on what has worked well and what challenges remain?

3.1.4 Interrupted Time Series Analysis

- What is the decision that the RUC evaluation will inform?

- What are the relevant intermediate and final outcomes (i.e. performance criteria) for the RUC evaluation?

- What are the current gaps in routinely collected data to inform an economic evaluation and how can these gaps be eliminated?

3.2 Discontinued Caller survey

To gather the views of people who tried to access the RUC pathway by calling NHS 24 111, but who ended the call before speaking to anyone, an online survey was conducted via the panel provider Norstat between 29th February and 20th March 2024. The questions used in the survey were developed by researchers at Picker to understand why patients decided to end their NHS 24 call before it was answered and what actions they took after ending the call. The panel members were screened for their eligibility to take part. The eligibility criteria included that they lived in Scotland and had discontinued a call to NHS 24 within the previous 6 months (either calling for themselves or on behalf of another adult or a child aged two years or over). Respondents who met the eligibility criteria were informed about the purpose of the survey and were asked for their consent to take part before being asked the questions.

Out of the 1675 people who were passed into the survey (i.e. confirmed they lived in Scotland), 466 had discontinued a call to NHS 24 within the last 6 months. Of these, 420 had called NHS 24 for themselves, for a child aged 2-16 years or for another adult, and 418 of these 420 respondents consented to participate in the survey. Some respondents were then removed by Norstat following quality control checks, which covered the quality of responses to the open-ended question, straight-lining (giving identical, or nearly identical, answers to a set of items) and speeding. A total of 387 responses were obtained after these checks.
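The screening figures above form a simple funnel. The short Python sketch below uses only the counts reported in this section to show how many people left at each stage; the gap of 31 between those who consented (418) and the final number of responses (387) corresponds to the quality control stage. The stage labels are descriptive only.

```python
# Counts reported in the text above, in screening order
funnel = [
    ("Passed into the survey (lived in Scotland)", 1675),
    ("Discontinued a call to NHS 24 in the last 6 months", 466),
    ("Called for themselves, a child aged 2-16 or another adult", 420),
    ("Consented to take part", 418),
    ("Responses retained after quality control checks", 387),
]

# Number of people lost between each stage and the stage before it
drop_offs = {
    stage: previous_n - n
    for (_, previous_n), (stage, n) in zip(funnel, funnel[1:])
}

for stage, n in funnel:
    suffix = f"  (-{drop_offs[stage]})" if stage in drop_offs else ""
    print(f"{n:>5}  {stage}{suffix}")
```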

3.2.1 Approach to analysis

Norstat, who conducted the survey of their panel members, provided Picker with frequency tables for all questions and the raw data. Picker carried out additional analyses using the statistical software package IBM SPSS to compare findings by subgroups. A number of derived variables were also created that grouped some of the responses into fewer categories.

Subgroup comparisons, such as a breakdown of responses to a question by time of call, were carried out using column proportion tests (pairwise comparisons with Bonferroni adjustments applied to significance values to account for multiple comparisons). Results tables, including subgroup breakdowns, and an explanation of how to interpret them are provided in section 6. The intention was also to report any differences in experience by demographic subgroup. However, the self-reported demographic data were of poor quality (i.e. respondents often answered the demographic questions about themselves rather than about the person needing care), which reduced the usable sample size and limited the ability to present reliable subgroup estimates.
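The column proportion tests described above were run in IBM SPSS. As an illustration of the general technique only, the Python sketch below runs a pooled two-proportion z-test for every pair of subgroups and multiplies each p-value by the number of comparisons (a Bonferroni adjustment). It is not SPSS's implementation, and details such as the handling of weights or very small bases may differ; the subgroup labels and counts in the usage example are hypothetical.

```python
import math
from itertools import combinations

def two_proportion_z(x1, n1, x2, n2):
    """Two-sided pooled z-test for a difference between two proportions."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0, 1.0
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal distribution: 2 * (1 - Phi(|z|))
    return z, math.erfc(abs(z) / math.sqrt(2))

def column_proportion_tests(counts, alpha=0.05):
    """Pairwise subgroup comparisons with a Bonferroni adjustment.

    counts maps a subgroup label to (number giving the response, subgroup base).
    Returns {(group_a, group_b): (z, adjusted p-value, significant?)}.
    """
    pairs = list(combinations(counts, 2))
    results = {}
    for a, b in pairs:
        z, p = two_proportion_z(*counts[a], *counts[b])
        p_adj = min(1.0, p * len(pairs))  # Bonferroni: scale by number of comparisons
        results[(a, b)] = (round(z, 2), p_adj, p_adj < alpha)
    return results

if __name__ == "__main__":
    # Hypothetical counts: (respondents giving the response, subgroup base)
    example = {
        "Weekday daytime": (60, 120),
        "Weekday evening": (45, 130),
        "Weekend": (30, 110),
    }
    for pair, (z, p_adj, significant) in column_proportion_tests(example).items():
        print(pair, z, round(p_adj, 4), significant)
```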

3.3 Patient Survey

To understand the experience of patients who have contacted NHS 24 and accessed the RUC pathway, a postal survey was conducted with the option for recipients to complete the survey online. The questionnaire was developed using, where possible, well-tested items from existing surveys, such as the Care Quality Commission’s Urgent and Emergency Care Survey[3] and NHS 111 surveys[4]. New items were developed in line with best practices in questionnaire design, to evaluate patient experiences of the RUC pathway. Due to timetable and budget constraints, cognitive testing of the questionnaire with patients was not feasible. However, feedback on the content of the questionnaire was sought from two patient and public involvement contributors who had recently contacted NHS 24 and accessed the RUC pathway. Following their review of the questionnaire, changes were made to the wording and/or response options at some questions, to improve question comprehension. The draft questionnaire was shared with the Research Advisory Group (RAG) for review, and subsequently some amendments were made. The final Patient survey questionnaire included 48 questions, which focussed on people’s experiences of calling NHS 24 and any other urgent care services they accessed before or after their call.

Following an approved application to the NHS Health and Social Care Public Benefit and Privacy Panel (PBPP), NHS 24 compiled a sample of eligible patients who had contacted NHS 24 between 7th and 21st April 2024 and had selected the RUC pathway via the NHS 24 Interactive Voice Response (IVR) options (i.e. the option people select if they think they need to go to A&E). Due to the lower volume of calls from people living in the Island Boards, a slightly longer sampling period was used for this cohort (25th March – 21st April 2024).

The sampling inclusion criteria were:

- All patients (aged 16 and above on the day of the call) who contacted NHS 24 and selected the A&E (RUC) pathway, regardless of whether they made the call themselves or the call was made on their behalf

- All parents / carers who contacted NHS 24 (A&E/RUC pathway) in the sampling period on behalf of a patient aged 2-16 years

The exclusion criteria were:

- Patients aged under 2 years (as the RUC pathway does not apply to this age group)

- Patients that had died after their call (removed following a check via NHS Central Register)

- Patients with an end-of-life code (to minimise the risk of contacting a recently bereaved family)

- Patients flagged for Public Protection

- Patients who called to obtain contraception (e.g. the morning after pill)

- Patients who suffered a miscarriage or another form of abortive pregnancy outcome (this includes women who had an ectopic pregnancy and, where possible, women who had a concealed pregnancy)

- Patients who called regarding feelings of suicide/attempted suicide

- Patients who called regarding sexual health concerns

- Patients without a UK postal address

- Patients without a registered health board code (i.e. those with ‘# not assigned’ in this field)

- Duplicate callers (only the most recent call episode retained)

To ensure the sample was nationally representative, a stratified systematic sample was taken. The sample was stratified by Health Board (with a minimum sample size set per Board), then a systematic sample was taken from an age-gender sorted list to ensure representativeness by age and gender. Before the survey was launched (and before each reminder mailing), NHS Central Register (NHSCR) carried out checks for any deceased patients so that they could be removed from the sample.
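The sampling design described above (a minimum sample size per Health Board, then a systematic sample from an age-gender sorted list within each Board) can be sketched in Python as follows. The proportional allocation rule, the minimum value, the field names (`board`, `age_band`, `gender`) and the random start are assumptions for illustration; the actual allocation is not stated in this section.

```python
import random

def allocate(board_counts, total, minimum):
    """Hypothetical allocation: proportional to frame size, raised to a per-Board minimum."""
    frame_size = sum(board_counts.values())
    return {
        board: max(minimum, round(total * count / frame_size))
        for board, count in board_counts.items()
    }

def systematic_sample(records, n, rng):
    """Select n records at a fixed interval from a random start."""
    if n >= len(records):
        return list(records)
    interval = len(records) / n
    start = rng.random() * interval
    # min() guards against floating-point rounding pushing the last index out of range
    return [records[min(int(start + i * interval), len(records) - 1)] for i in range(n)]

def stratified_systematic_sample(frame, allocation, seed=0):
    """frame: list of dicts with hypothetical keys 'board', 'age_band', 'gender'."""
    rng = random.Random(seed)
    sample = []
    for board, n in allocation.items():
        stratum = [r for r in frame if r["board"] == board]
        # Sorting by age band, then gender, means the fixed interval spreads the
        # selections across age and gender groups (implicit stratification)
        stratum.sort(key=lambda r: (r["age_band"], r["gender"]))
        sample.extend(systematic_sample(stratum, n, rng))
    return sample
```

Because the minimum overrides proportional allocation for small Boards, their patients have a higher chance of selection, which is why selection weighting is needed at the analysis stage.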

The questionnaire was mailed to a sample of 3,497 individuals. The survey was in field between 22nd May and 12th July 2024, during which time recipients were able to take part via the paper survey mailed to them, online or over the phone. Up to two reminders were mailed to non-respondents to maximise response rates. A survey helpdesk was provided during fieldwork, with a freephone number and an email address, so that recipients could opt out, complete the questionnaire over the phone (with access to Language Line, although no participant requested this service) or have any queries answered. Overall, 662 of 3215 eligible people[5] responded to the survey, a response rate of 21%. As this was lower than the expected 30%, the maximum margin of error[6] is slightly larger than anticipated (+/-4% rather than +/-3%), meaning the estimates are less precise than expected.
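The response rate and margin of error figures can be reproduced approximately with the usual worst-case formula for a proportion, 1.96 × √(0.25 / n). This sketch assumes 95% confidence, simple random sampling and no adjustment for weighting or finite population size; footnote [6] may define the margin of error differently.

```python
import math

def max_margin_of_error(n, z=1.96):
    """Worst-case (p = 0.5) margin of error for a proportion under simple random sampling."""
    return z * math.sqrt(0.25 / n)

eligible, responded = 3215, 662
response_rate = responded / eligible                  # about 0.206
moe_achieved = max_margin_of_error(responded)         # about 0.038
moe_expected = max_margin_of_error(0.30 * eligible)   # about 0.032 at a 30% response rate

print(f"Response rate: {response_rate:.1%}")
print(f"Maximum margin of error: +/-{moe_achieved:.1%} (expected +/-{moe_expected:.1%})")
```

Rounded to whole percentages, these give the 21%, +/-4% and +/-3% figures quoted above.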

3.3.1 Approach to analysis

The response data from the completed paper questionnaires was combined with the online response data. At the outset of analysis, the response data was cleaned, including removing responses to questions that should have been skipped by the respondent and values that were out of range. The following records were also removed as part of the cleaning process: online respondents who had not fully completed the survey and did not wish their responses to be used in that case, and respondents who answered fewer than five questions in either the paper or online version.
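The cleaning rules above can be expressed as a record-level filter. In this Python sketch the record structure (`mode`, `complete`, `use_partial`, `answers`), the codebook (`valid_codes`) and the routing table are all hypothetical; it also assumes an order of operations (blank invalid and wrongly routed answers first, then count the questions answered).

```python
def clean_answers(answers, valid_codes, routing):
    """Drop out-of-range answers and answers to questions that should have been skipped.

    valid_codes: question -> set of allowed response codes (hypothetical codebook)
    routing: question -> (filter question, set of filter answers that lead to it)
    """
    cleaned = {}
    for question, value in answers.items():
        if value not in valid_codes.get(question, set()):
            continue  # out-of-range value: removed
        if question in routing:
            filter_question, routed_answers = routing[question]
            if answers.get(filter_question) not in routed_answers:
                continue  # respondent should have skipped this question: removed
        cleaned[question] = value
    return cleaned

def keep_record(record, min_answered=5):
    """Apply the two record-level exclusions."""
    if record["mode"] == "online" and not record["complete"] and not record["use_partial"]:
        return False  # partial online response the respondent did not want used
    return len(record["answers"]) >= min_answered

def clean_dataset(records, valid_codes, routing):
    """Clean each record's answers, then drop records that fail the exclusions."""
    cleaned_records = []
    for record in records:
        record = {**record, "answers": clean_answers(record["answers"], valid_codes, routing)}
        if keep_record(record):
            cleaned_records.append(record)
    return cleaned_records
```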

Derived variables were created to allow for the required subgroup analysis to be undertaken, such as by date/time of call categories.

Because the sampling design set a minimum number of sampled patients for each Health Board, selection probabilities were unequal and smaller Health Boards were overrepresented. Selection weighting was applied to the achieved sample to correct for these unequal selection probabilities, so that the achieved sample[7] proportions for each Health Board aligned with the full sample proportions. To adjust for nonresponse bias, a nonresponse weight was developed (using age and gender) and applied to the achieved sample.
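The two weighting stages can be illustrated with a simple cell-based adjustment: first a selection weight so that Health Board shares match their target shares, then a nonresponse factor so that the age-gender distribution matches its target. This report does not describe the exact weighting procedure (for example, whether cell weighting or raking was used), so this Python sketch, its target shares and its sequential order are assumptions.

```python
from collections import defaultdict

def adjustment_factors(cells, base_weights, target_shares):
    """Factor per cell = target share / current weighted share of that cell."""
    totals = defaultdict(float)
    for cell, w in zip(cells, base_weights):
        totals[cell] += w
    grand_total = sum(base_weights)
    return {cell: target_shares[cell] / (total / grand_total) for cell, total in totals.items()}

def survey_weights(boards, age_gender, board_targets, age_gender_targets):
    """Sequential cell weighting (assumed procedure, for illustration).

    boards, age_gender: one entry per respondent.
    board_targets, age_gender_targets: hypothetical target shares summing to 1.
    """
    n = len(boards)
    # Step 1: selection weight so each Health Board's weighted share matches its target
    board_factor = adjustment_factors(boards, [1.0] * n, board_targets)
    weights = [board_factor[b] for b in boards]
    # Step 2: nonresponse adjustment on age-gender cells, applied on top of step 1
    ag_factor = adjustment_factors(age_gender, weights, age_gender_targets)
    weights = [w * ag_factor[c] for w, c in zip(weights, age_gender)]
    # Rescale so the weights average 1 (this does not change any weighted shares)
    mean_w = sum(weights) / n
    return [w / mean_w for w in weights]
```

Step 2 can move the Health Board shares slightly away from their targets; raking would repeat both steps until both sets of margins agree.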

Frequency table data was generated for each survey question showing counts and percentages for each response option. A breakdown of the frequency data by a number of factors was produced (e.g., waiting time for NHS 24 111 call to be answered, day/time of call and caller type) for subgroup analysis. It was not possible to undertake analysis by demographic groups (e.g., age) as some callers to NHS 24 111 were calling on behalf of someone else (i.e., a child or another adult).

A comparative report in Excel was produced to show differences in caller experiences for the 17 experience-based questions. Responses to questions that measure patient experience and are evaluative in nature are assigned a positive score, which is used in the reporting. ‘Routing’ questions, used to guide respondents past any questions that may not be relevant to them, are not scored (e.g. ‘Was NHS 24 111 the first service you contacted for help?’). Similarly, questions used for descriptive or information purposes (e.g. ‘At the end of the call, what action or advice did you receive from NHS 24 111?’) are not scored either. The positive score is the percentage of respondents who gave a positive response(s). Non-specific responses, such as ‘Don’t know/Can’t remember’ or ‘Not sure’, are not included in the scoring. Positive scores were used to allow comparisons between subgroups, and significance testing (using the Z-test) was performed to see whether any differences between the groups were statistically significant. Where results were compared by subgroup for the experience-based (scored) questions in some charts in the Main report, a significantly higher percentage (score) was shown in green font and a significantly lower percentage (score) in red font.
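The positive score and the green/red flagging described above can be sketched as follows. The response labels, the set of options treated as positive, the use of unweighted bases and the pooled two-proportion z-test at a two-sided 5% level are all assumptions for illustration.

```python
import math

# Non-specific answers excluded from scoring (labels as quoted in the text)
NON_SPECIFIC = {"Don't know/Can't remember", "Not sure"}

def positive_score(responses, positive_options):
    """Return (positive score in %, scorable base) for one question and subgroup."""
    scorable = [r for r in responses if r not in NON_SPECIFIC]
    if not scorable:
        return None, 0
    positives = sum(r in positive_options for r in scorable)
    return 100 * positives / len(scorable), len(scorable)

def flag_difference(score_a, base_a, score_b, base_b, z_crit=1.96):
    """Pooled two-proportion z-test of subgroup A against subgroup B.

    Returns 'green' if A is significantly higher, 'red' if significantly lower,
    otherwise None (z_crit = 1.96 corresponds to a two-sided 5% level).
    """
    p_a, p_b = score_a / 100, score_b / 100
    pooled = (p_a * base_a + p_b * base_b) / (base_a + base_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / base_a + 1 / base_b))
    if se == 0:
        return None
    z = (p_a - p_b) / se
    if z > z_crit:
        return "green"
    if z < -z_crit:
        return "red"
    return None

if __name__ == "__main__":
    # Hypothetical response labels; 'Not sure' is excluded from the base
    answers = ["Yes, definitely", "Yes, to some extent", "No", "Not sure"]
    print(positive_score(answers, {"Yes, definitely", "Yes, to some extent"}))
```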

Where the unweighted base size for a question, or within any subgroup breakdown, is fewer than 11, the data are suppressed and replaced with an asterisk (*).
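The suppression rule can be applied as a single check when building tables. A minimal sketch, assuming percentages are displayed rounded to whole numbers (the display format is an assumption):

```python
def display_value(percentage, unweighted_base, min_base=11):
    """Return '*' when the unweighted base is below 11, otherwise the rounded percentage."""
    if unweighted_base < min_base:
        return "*"
    return f"{percentage:.0f}%"
```

The check uses the unweighted base even when the percentage itself is weighted, so small cells are suppressed regardless of their weights.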

3.4 Focus Groups with NHS staff

Three focus groups were conducted during March and April 2024 with staff working across the urgent care pathway:

- Focus Group 1: staff from NHS 24 and Flow Navigation Centres

- Focus Group 2: staff in managerial roles working across the urgent care pathway

- Focus Group 3: clinical staff working in primary and secondary urgent care settings

To enable staff from a broad range of regions across Scotland to participate, each of the 60-minute focus groups took place online using video conferencing software (Microsoft Teams) and was facilitated by an experienced qualitative researcher. With the participants’ consent, the discussions were audio- and video-recorded and transcribed. Participants were given a £40 shopping voucher in recognition of, and thanks for, their time; in some cases, a donation was made to a charity associated with the participant’s NHS Board.

A purposive sampling approach was used to recruit staff for the focus groups. Picker contacted each Board for support in disseminating information about the focus groups to staff. Materials, including an information sheet and a social media advert, were shared with key contacts at each Board to disseminate to staff through channels such as internal newsletters/updates and social media. Any staff working in urgent and unscheduled care who were interested in taking part were asked to contact Picker directly, rather than going via their organisations, so that the contacts at the NHS Boards were not directly involved in selection.

If staff were interested in taking part in a focus group, they completed an online form which gathered information about their role (e.g. administrative, clinical, managerial), the sector of the urgent care pathway they worked in (such as NHS 24, A&E, Primary Care Out of Hours), their Health Board(s) and demographic information. This form was used to screen respondents for eligibility to participate in a discussion.

3.4.1 Approach to analysis

A thematic analysis was undertaken to analyse the data from the focus groups based on the following steps:

- Notes were made of the key messages following each of the discussions, using the transcript automatically generated at the end of the Teams call as a prompt.

- Once the audio files had been professionally transcribed, the transcripts were checked by a researcher against the audio file to amend any errors and add in any missing words not detected by the transcriber.

- The researcher then familiarised themselves with the transcripts by reading through them multiple times.

- The transcripts were uploaded to NVivo, a qualitative data analysis software package. Working through one transcript at a time, sections of text were highlighted and assigned a label or ‘code’ that described their content. An inductive approach to coding was taken: there was no predefined code-frame, and new codes were added during the process.

- Following the initial round of data coding, codes were organised into groups that were broadly related to each other under each category.

- Further iterations of coding were conducted to review the codes which included re-naming codes, combining codes and removing/re-assigning codes to certain extracts of data.

- The generated codes were used to identify key concepts and patterns in the data. This allowed the researcher to look both across and within individual cases/groups to explore themes. These formed the basis for thematic reporting with participant quotes used to demonstrate the validity of the analyses.

3.5 Analysis of existing data: Interrupted Time Series Analysis

To assess changes to key Urgent Care delivery metrics before and after implementation of the RUC, Interrupted Time Series Analysis was conducted. This type of analysis draws on causal inference, the field of statistics that models cause-and-effect relationships between variables of interest. It goes beyond analysis that looks at associative relationships (i.e. correlation) by seeking to estimate how one variable (the exposure or treatment: the RUC) changes or affects another (the urgent care metrics). However, as it was not possible to control for several other factors that may have affected urgent care at the same time as the RUC, such as other national policies and the COVID-19 pandemic, the results of the Interrupted Time Series Analysis should be interpreted with caution.

3.5.1 Understanding the decision context

To help identify the Urgent Care delivery metrics for use in the analysis, three one-to-one interviews were conducted with representatives from NHS Scotland and the Centre for Sustainable Delivery (a Chief Executive Officer of a Health Board; a Strategic Lead for the Medicine and Unscheduled Care Portfolio of a Health Board; and a Senior Manager in the Centre for Sustainable Delivery). The semi-structured interviews took place via online video conferencing. The decision context consisted of four key elements (see Section 10 for the complete interview topic guide):

- What was the RUC, and how should it look in the future?

- Who was/is involved in the development of the RUC?

- How does the decision to fund the RUC interact with other funding decisions?

- What are the anticipated delivery metrics affected by the RUC?

3.5.2 Identifying Urgent Care delivery metrics

Urgent Care delivery metrics that were likely to be affected by the RUC in the short term were identified for the purposes of monitoring and evaluating the RUC. The selection of relevant metrics was based on published literature[8], the interviews about the decision context and the availability of the data. The identified metrics were used in the Interrupted Time Series Analysis.

3.5.3 Interrupted Time Series analysis of Urgent Care delivery metrics at National and Health board level

Routinely collected (weekly) data was accessed through Public Health Scotland (PHS) to assess the change to the Urgent Care delivery metrics, comparing pre- and post-implementation of the RUC at national and Health Board level. To get access to this data, a data sharing request was submitted to and granted by PHS. Analysis was conducted first at national level to estimate the overall change to the Urgent Care delivery metrics. This was followed by analysis at Health Board level to estimate any changes pre- and post-implementation of the RUC by Health Board.

Following guidance from the Medical Research Council, and good practice in policy evaluation, a quasi-experimental design was employed to analyse the PHS data. Quasi-experimental designs (often described as non-randomised pre-post intervention studies[9]) aim to estimate cause-and-effect relationships without relying on random assignment of participants to treatment and control groups. This approach addresses the lack of an available control group for the RUC at national level (i.e. there is no other similar national setting, with similar health systems, exposed to similar external factors, to viably compare to).

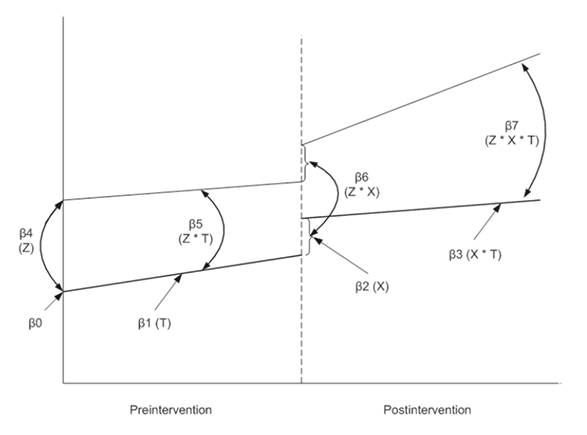

Critical consideration was given to the range of quasi-experimental design approaches to determine the most appropriate statistical analysis for assessing changes to the Urgent Care delivery metrics before and after implementation of the RUC. Interrupted Time Series Analysis was selected as it analyses data over time, comparing trends before and after a period of interruption (Figure 1, described below). The period of interruption (represented by the dashed line) is defined by the analyst and informed by contextual understanding of the problem (i.e. the impact of the RUC). For this analysis, the period of implementation was informed by the decision context and consultation with the Research Advisory Group. To allow for the period of implementation of the RUC, and the atypical effects of COVID-19, a two-year interruption period was defined: 01 January 2020 to 01 January 2022.

To assess change in the Urgent Care delivery metrics at national level, single group Interrupted Time Series Analysis was employed (represented by the lower line only in Figure 1). Single group Interrupted Time Series Analysis compares pre- and post-interruption trends when there is no comparison group under study. In this model, β0 represents the starting level of the metric, β1 is the slope or trajectory of the metric until the introduction of the intervention, β2 represents the change in the level of the metric that occurs immediately following the period of interruption, and β3 describes the difference between the pre-intervention and post-intervention slopes of the outcome. Thus, β2 indicates the immediate effect of the RUC (post interruption), and β3 indicates the intervention effect over time.
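The single group model can be estimated as a segmented regression. The Python sketch below (using NumPy) builds a design matrix with one column per coefficient, β0 to β3, drops the observations inside the interruption window and fits ordinary least squares. The weekly indices, the simulated series and the centring of the slope-change term at the end of the interruption are illustrative assumptions; standard errors that account for serial correlation are not shown.

```python
import numpy as np

def single_group_design(n_periods, interrupt_start, interrupt_end):
    """Design matrix for a single group segmented regression.

    Periods from interrupt_start up to (but not including) interrupt_end are dropped,
    mirroring the excluded interruption window. `keep` is a boolean mask to apply to
    an observed weekly series. Columns map to beta0..beta3.
    """
    t = np.arange(n_periods)
    keep = (t < interrupt_start) | (t >= interrupt_end)
    t = t[keep].astype(float)
    post = (t >= interrupt_end).astype(float)  # X_t: 1 after the interruption, else 0
    X = np.column_stack([
        np.ones_like(t),              # beta0: starting level
        t,                            # beta1: pre-interruption slope
        post,                         # beta2: level change after the interruption
        post * (t - interrupt_end),   # beta3: change in slope after the interruption
    ])
    return keep, X

def fit_ols(y, X):
    """Ordinary least squares point estimates (no serial-correlation adjustment)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

if __name__ == "__main__":
    # Hypothetical weekly series: weeks 104-207 stand in for the interruption window
    n, start, end = 312, 104, 208
    keep, X = single_group_design(n, start, end)
    rng = np.random.default_rng(1)
    y = X @ np.array([500.0, 1.0, -40.0, -0.5]) + rng.normal(0, 5, X.shape[0])
    print(np.round(fit_ols(y, X), 2))  # estimates close to [500, 1, -40, -0.5]
```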

Next, to assess change in the Urgent Care delivery metrics at Health Board level, multiple group Interrupted Time Series Analysis was conducted. This was possible because another Health Board, or a combination of other Health Boards, may act as a control group for comparison when statistical assumptions hold (described below). For multiple group Interrupted Time Series Analysis, the lower lines in Figure 1 represent the control group and the upper lines represent the treatment group (the Health Board under investigation). β4 describes the difference in the level of the delivery metric between treatment and controls prior to the intervention, β5 represents the difference in the slope (trend) of the delivery metric between treatment and controls prior to the intervention, β6 describes the difference between treatment and controls in the level of the metric immediately following the period of interruption, and β7 describes the difference between treatment and controls in the slope (trend) of the metric post-intervention.
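This report does not state the regression equation. One standard way of writing the multiple group model that is consistent with the coefficient definitions above is shown below, where T_t is time since the start of the series, X_t equals 1 after the period of interruption (0 before), T_0 is the first period after the interruption and Z equals 1 for the treatment Health Board (0 for controls). Dropping the terms in Z gives the single group model with β0 to β3. This parameterisation is an assumption for illustration.

```latex
Y_t = \beta_0 + \beta_1 T_t + \beta_2 X_t + \beta_3 X_t (T_t - T_0)
    + \beta_4 Z + \beta_5 Z T_t + \beta_6 Z X_t + \beta_7 Z X_t (T_t - T_0) + \varepsilon_t
```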

A Health Board, or combination of Health Boards, was chosen as a control for a given Health Board on a specific metric if they had a similar initial level and trend in the pre-period (i.e. the β4 and β5 coefficients in Figure 1 were not statistically significant). Control Health Board(s) were selected separately for each Health Board and each Urgent Care delivery metric (i.e. a Health Board could have different control Health Board(s) for two different Urgent Care delivery metrics). The following steps were followed to select comparable Health Boards for each metric:

1. Identification of similar Health Boards through the inspection of pre-trends for a given metric.

2. Generating three groups of Health Boards with similar pre-trends based on visual inspection (i.e. small, medium and large, based on their pre-trend volume of urgent hospital admissions). Urgent hospital admissions were used in this step as they were one of the main metrics of RUC impact.

3. Statistical testing of starting level and pre-trend slopes, comparing the treatment group to a combination of all other Health Boards. If statistical non-significance was achieved (i.e. comparable pre-trends between treatment and control Health Boards), the analysis continued with step 6; otherwise, it continued with step 4.

4. Statistical testing of pre-trends, comparing the treatment group to other Health Boards in the same size group (see step 2). If statistical non-significance was achieved, the analysis continued with step 6; otherwise, it continued with step 5.

5. Statistical testing of pre-trends, comparing the treatment group to combinations of other Health Boards (i.e. not only within the same size group) until statistical non-significance in pre-trends was achieved.

6. Serial correlation test (i.e. Cumby-Huizinga test for autocorrelation to assess the degree of correlation of the outcome variable across successive time intervals) and adjustment for correlation (i.e. number of time lags) where required.

7. Running the Interrupted Time Series Analysis regression on all metrics, using for each regression model the control group and serial correlation specification resulting from the previous steps.
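Steps 3 to 5 above amount to searching for a set of control Health Boards whose pre-period level and slope are not significantly different from those of the treatment Board. The Python sketch below tests β4 and β5 on pre-period data only, using ordinary least squares with a normal approximation for the p-values, and tries candidate control sets in the order of steps 3 to 5. How control Boards are pooled (here, stacked as separate series), the order in which step 5 combinations are tried and the 5% threshold are assumptions; steps 1, 2 and 6 (visual inspection and the Cumby-Huizinga test) are not implemented.

```python
import math
from itertools import combinations

import numpy as np

def pretrend_pvalues(treat, controls):
    """Two-sided p-values for beta4 (level difference) and beta5 (slope difference)
    in the pre-period, treatment Board versus candidate control Boards.

    treat: pre-period values for the treatment Board.
    controls: list of pre-period series for candidate controls, each stacked as a
    separate series with Z = 0 (an assumption about how Boards are pooled).
    """
    series = [(np.asarray(treat, float), 1.0)]
    series += [(np.asarray(c, float), 0.0) for c in controls]
    rows, y = [], []
    for values, z in series:
        for t, value in enumerate(values):
            rows.append([1.0, t, z, z * t])  # columns: beta0, beta1, beta4, beta5
            y.append(value)
    X, y = np.array(rows), np.array(y)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (len(y) - X.shape[1])
    se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))
    # Normal approximation to the t distribution (reasonable for long weekly series)
    return [math.erfc(abs(beta[k] / se[k]) / math.sqrt(2)) for k in (2, 3)]

def select_controls(treat, others, size_group, alpha=0.05):
    """Try candidate control sets in the order of steps 3 to 5.

    others: dict mapping each other Board's name to its pre-period series.
    size_group: names of Boards in the treatment Board's size group (step 2).
    Returns the first tuple of Board names with non-significant beta4 and beta5.
    """
    candidates = [tuple(others), tuple(b for b in others if b in size_group)]
    # Step 5 (assumed order): remaining combinations, largest first
    for k in range(len(others) - 1, 0, -1):
        candidates.extend(combinations(others, k))
    for combo in candidates:
        if not combo:
            continue
        p_level, p_slope = pretrend_pvalues(treat, [others[b] for b in combo])
        if p_level >= alpha and p_slope >= alpha:
            return combo
    return None
```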

To support interpretation of the results of the Interrupted Time Series Analysis at national level, the results of the regression analysis were used to estimate annualised outcomes (i.e. volume of each outcome in a “standardised” year) before and after RUC. The concept of “standardised” year in the pre and post periods was used in order to facilitate interpretation of the results as it could be interpreted as a typical year of trends immediately before and after RUC implementation. This allows for comparisons of the Urgent Care delivery metrics based on changes in trends during the pre- and post-RUC implementation periods. Similarly, the results of the Interrupted Time Series Analysis at Health Board level were used to generate relative differences by Health Board (i.e. what was the difference between the “treatment” Health Board and its “control” group of Health Boards in relative terms).
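This report does not specify exactly how the "standardised" year was constructed. As one plausible reading, the Python sketch below sums the fitted weekly values from the pre-interruption trend over the 52 weeks before the interruption, and from the post-interruption trend over the 52 weeks after it, using single group coefficients β0 to β3. The 52-week windows and the function names are assumptions; the relative difference is the treatment value minus the control value, divided by the control value.

```python
import numpy as np

def standardised_years(beta, interrupt_start, interrupt_end, weeks=52):
    """Annualised volumes before and after the interruption (one plausible reading).

    beta: single group coefficients (beta0, beta1, beta2, beta3) on a weekly time index.
    Pre: pre-interruption trend summed over the `weeks` weeks before interrupt_start.
    Post: post-interruption trend summed over the `weeks` weeks from interrupt_end.
    """
    b0, b1, b2, b3 = beta
    pre_t = np.arange(interrupt_start - weeks, interrupt_start)
    post_t = np.arange(interrupt_end, interrupt_end + weeks)
    pre = float(np.sum(b0 + b1 * pre_t))
    post = float(np.sum(b0 + b1 * post_t + b2 + b3 * (post_t - interrupt_end)))
    return pre, post

def relative_difference(treatment_value, control_value):
    """Treatment minus control, relative to the control value."""
    return (treatment_value - control_value) / control_value
```

For example, a flat series of 100 per week with an immediate drop of 10 after the interruption gives 5,200 in the pre-period standardised year and 4,680 in the post-period one.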

It should be noted that it was not possible to identify a suitable ‘control’ for Greater Glasgow and Clyde, given the far larger population served by this Board. As such, the Health Board level Interrupted Time Series Analysis does not provide findings for Greater Glasgow and Clyde for metrics based on absolute numbers (please see Tables 1 to 5 in section 9 of this report).

Importantly, the Interrupted Time Series Analysis attempted to assess the change to Urgent Care delivery metrics between the pre- and post-implementation periods of the RUC while reducing bias as much as possible. The careful specification of control Health Boards for each individual Health Board and each metric, as detailed above, was intended to mitigate confounding bias in the results. Further, systemic impacts (e.g. COVID-19) were controlled for in the Interrupted Time Series Analysis, as far as possible, by deliberately excluding two years’ worth of data, from 01 January 2020 to 01 January 2022. However, causation cannot be concluded from these findings, as any change is likely to have been driven by several factors, including but not limited to the introduction of the RUC; for example, the ongoing impact of the COVID-19 pandemic, which is not yet quantifiable.

Contact

Email: dlhscbwsiawsiaa@gov.scot
